Strategic Integration of Artificial Intelligence into the Hospital Anatomical Pathology Workflow: A Practical Guide (Part 2)

In the first installment of this guide, we focused on what rarely makes the headlines: the invisible foundations that determine whether artificial intelligence (AI) becomes a transformative tool or just another forgotten experiment.

We saw that before discussing algorithms, hospitals must audit their workflows, ensure robust slide digitization, and build infrastructure capable of handling the data load and integrating seamlessly with clinical systems.

But there comes a moment when all that groundwork is put to the test.

Leadership inevitably asks:

“So now what? When will we actually see AI working in our daily practice?”

This is where many implementations stall or fail—not because the technology itself breaks, but because real-world deployment in a hospital setting demands far more than a precise model.

It requires rigorous validation, cultural adaptation, and a phased approach that protects both clinical trust and operational efficiency.

In this second part of the series, we’ll explore how to turn a promising model into a viable, trusted solution within a hospital.

We’ll discuss:

Why validating an AI model is about far more than measuring accuracy.

How to move from a controlled pilot to full-scale adoption without disrupting services.

How to ensure the clinical team not only accepts, but truly trusts the tool.

How to govern data, mitigate bias, and guarantee explainability and ethical standards.

Because preparing the ground doesn’t guarantee success.

What you do when deploying AI will decide whether it becomes a competitive advantage—or a project left to collect dust.

Multidimensional Validation: More Than Algorithmic Accuracy

When a hospital begins testing an AI model, the focus often zeroes in on one metric: accuracy.

If the algorithm detects a tumor with 95% accuracy, it’s considered ready.

But in clinical reality, that single figure says very little about its true value.
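
A short worked example shows why. The Python sketch below uses a hypothetical cohort, not data from any study. If 5% of 1,000 biopsies contain tumor, a “model” that calls every slide benign still scores 95% accuracy while missing every tumor. Sensitivity (the share of tumors detected) and specificity (the share of benign cases correctly cleared) expose the problem immediately.

```python
def confusion_metrics(y_true, y_pred):
    """Return accuracy, sensitivity and specificity for binary labels (1 = tumor, 0 = benign)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # tumors correctly detected
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # benign slides correctly cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed tumors
    accuracy = (tp + tn) / len(pairs)
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return accuracy, sensitivity, specificity


# Hypothetical cohort: 50 tumor slides and 950 benign slides (5% prevalence).
y_true = [1] * 50 + [0] * 950
# A degenerate "model" that labels every slide benign.
y_pred = [0] * 1000

acc, sens, spec = confusion_metrics(y_true, y_pred)
print(f"accuracy={acc:.2f} sensitivity={sens:.2f} specificity={spec:.2f}")
# prints: accuracy=0.95 sensitivity=0.00 specificity=1.00
```

The headline number looks excellent, yet the tool would be clinically useless. That is why the analytical questions below focus on sensitivity and specificity rather than accuracy alone.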

Validating an AI solution isn’t about a single percentage. It’s a holistic process that must answer four essential questions:

Does the technology function as intended? (Technical validation)

Does the algorithm deliver on its promise, consistently? (Analytical validation)

Does it genuinely help clinicians make better decisions for patients? (Clinical validation)

Does it integrate into and enhance workflows, rather than disrupt them? (Operational validation)

If even one of these pillars fails, adoption will be fragile—and clinical trust nearly impossible.

1. Technical Validation: The Invisible Foundation

This focuses on infrastructure and data integrity:

Do whole-slide images (WSIs) faithfully represent the physical slide?

Are there artifacts, incomplete sections, or focus issues?

Do scanners and viewers meet minimum standards (resolution, calibration, data integrity)?

No model can be reliable if its foundational inputs are compromised.
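
One common way to screen for focus problems is the variance of the Laplacian. Sharp tiles contain strong local intensity changes, and blurred ones do not. The sketch below assumes tiles have already been extracted from the WSI as grayscale NumPy arrays (extraction is not shown). The threshold is a hypothetical value that would need to be calibrated per scanner and stain against tiles a pathologist has judged in or out of focus.

```python
import numpy as np


def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian; low values suggest an out-of-focus tile."""
    g = gray.astype(np.float64)
    # Discrete Laplacian on interior pixels: up + down + left + right - 4 * centre.
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())


def flag_blurry_tiles(tiles, threshold):
    """Return indices of tiles whose focus score falls below a site-specific threshold."""
    return [i for i, tile in enumerate(tiles) if laplacian_variance(tile) < threshold]


rng = np.random.default_rng(0)
sharp = rng.integers(0, 256, size=(256, 256)).astype(np.uint8)  # lots of fine detail
blurry = np.full((256, 256), 128, dtype=np.uint8)               # flat, no detail at all
print(flag_blurry_tiles([sharp, blurry], threshold=100.0))
# prints: [1]
```

In this sketch, flagged tiles are only returned. A real pipeline might route them for rescanning or manual review before any model sees them.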

2. Analytical Validation: Beyond Pretty Statistics

Here the question is whether the algorithm is accurate and robust in real-world conditions:

Do sensitivity and specificity hold when tested on your hospital’s data, not just on public datasets?

Does performance degrade with variations in staining, lab processes, or batch quality?

How does it handle rare or low-quality cases?

Superficial validation builds a false sense of security that crumbles on first deployment.
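
To check whether performance holds across staining batches or scanners, metrics can be broken down by stratum and reported with confidence intervals. This keeps a small batch with a high point estimate from being over-interpreted. The minimal Python sketch below assumes each validation case is recorded as a (stratum, true label, predicted label) tuple. The batch names and counts are hypothetical. It uses the Wilson score interval, which behaves better than the simple normal approximation for small samples and proportions near 0 or 1.

```python
import math
from collections import defaultdict


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 gives roughly 95%)."""
    if n == 0:
        return (float("nan"), float("nan"))
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return (max(0.0, centre - half), min(1.0, centre + half))


def stratified_performance(records):
    """records: iterable of (stratum, y_true, y_pred) with 1 = tumor; returns metrics per stratum."""
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for stratum, truth, pred in records:
        c = counts[stratum]
        if truth == 1:
            c["tp" if pred == 1 else "fn"] += 1
        else:
            c["tn" if pred == 0 else "fp"] += 1
    report = {}
    for stratum, c in counts.items():
        pos, neg = c["tp"] + c["fn"], c["tn"] + c["fp"]
        report[stratum] = {
            # Each metric is (point estimate, (lower bound, upper bound)).
            "sensitivity": (c["tp"] / pos if pos else float("nan"), wilson_interval(c["tp"], pos)),
            "specificity": (c["tn"] / neg if neg else float("nan"), wilson_interval(c["tn"], neg)),
        }
    return report


# Hypothetical validation set from two staining batches.
records = (
    [("batch_A", 1, 1)] * 19 + [("batch_A", 1, 0)] * 1
    + [("batch_A", 0, 0)] * 29 + [("batch_A", 0, 1)] * 1
    + [("batch_B", 1, 1)] * 14 + [("batch_B", 1, 0)] * 6
    + [("batch_B", 0, 0)] * 28 + [("batch_B", 0, 1)] * 2
)
for stratum, metrics in stratified_performance(records).items():
    sens, (lo, hi) = metrics["sensitivity"]
    print(f"{stratum}: sensitivity {sens:.2f} (95% CI {lo:.2f} to {hi:.2f})")
```

With only 20 positive cases per batch, the two sensitivity intervals overlap. That is itself a finding: an apparent batch-level drop should be confirmed on more cases before conclusions are drawn.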

3. Clinical Validation: From Data to Decision

Highlighting regions of interest or outputting metrics isn’t enough.

The model must improve diagnostic and therapeutic decision-making:

Does it reduce errors or inter-observer variability among pathologists?

Does it enable diagnoses or predictions previously out of reach?

Does it truly accelerate turnaround time (TAT) without sacrificing quality?

Clinical validation is what turns a model from “interesting” into “indispensable.”
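
Inter-observer variability between two pathologists is often quantified with Cohen’s kappa. Kappa measures agreement beyond what chance alone would produce, where 1 means perfect agreement and 0 means chance level. The Python sketch below compares the same two readers without and with AI assistance. The labels are invented for illustration, and a real study would need far more cases and a pre-specified design.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same cases in the same order."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty list of cases")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected by chance, from each rater's own label frequencies.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    if expected == 1.0:
        return float("nan")  # both raters used one identical label throughout; kappa is undefined
    return (observed - expected) / (1 - expected)


# Hypothetical reads of the same 10 cases (B = benign, M = malignant).
# For simplicity, pathologist 1's reads are identical in both rounds.
path_1 = ["B", "B", "M", "M", "B", "M", "B", "B", "M", "B"]
path_2_unassisted = ["B", "M", "M", "B", "B", "M", "B", "M", "M", "B"]
path_2_assisted = ["B", "M", "M", "M", "B", "M", "B", "B", "M", "B"]

print(f"unassisted kappa={cohens_kappa(path_1, path_2_unassisted):.2f}")  # prints: unassisted kappa=0.40
print(f"assisted kappa={cohens_kappa(path_1, path_2_assisted):.2f}")      # prints: assisted kappa=0.80
```

A rise in kappa like this would support the claim that the tool reduces variability. On ten hypothetical cases, it proves nothing.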

4. Operational Validation: Trust and Workflow

A flawless model in the lab can still fail if it breaks the rhythm of the department:

Does it integrate seamlessly with LIS, PACS, and EMR?

Do clinicians actually use it—or avoid it?

Does it reduce workload or add unnecessary steps?

Operational validation often marks the difference between a successful pilot… and a forgotten rollout.

Remember

Multidimensional validation isn’t bureaucracy. It’s the bridge between a promising model and a trusted solution.

Only by testing across these four dimensions can a hospital ensure that AI is not just accurate on paper, but useful, trusted, and sustainable in day-to-day clinical practice.

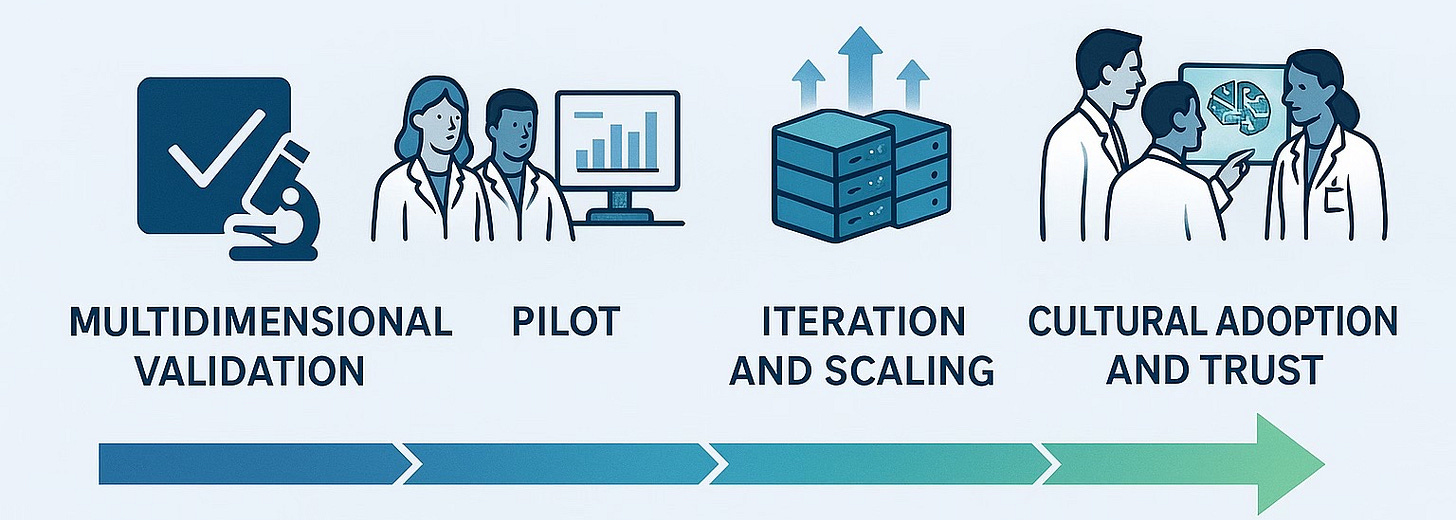

Gradual Deployment: Pilots, Iterative Learning, and Smart Scaling

Moving from a validated AI model to daily use in a hospital isn’t about “switching on software.” In many ways, it introduces a new way of working, one that affects pathologists, lab technicians, IT teams, and administrators alike.

The AI might be ready, but if it’s deployed without adaptation, the department becomes disorganized, staff lose trust, and the project risks stalling indefinitely.

The key isn’t to deploy fast. It’s to deploy well—learning at every step.

1. Purposeful Pilots (More Than a Marketing Demo)

A pilot isn’t just a technical test or a presentation-friendly demo. It’s the learning lab where the hospital figures out how to coexist with AI.

For it to work, it must answer clear questions:

What real problem are we solving? (e.g., cutting prostate biopsy turnaround time by 30%).

How will we measure impact? (Turnaround time, diagnostic variability, accuracy).

Who will be involved, and what training do they need beforehand?

How will we collect staff feedback? (Interviews, surveys, quick review sessions).

A successful pilot isn’t the one where “everything works perfectly,” but the one that uncovers friction points, fixes them, and builds trust before the next step.
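
Once a pilot goal like the 30% turnaround target above is set, checking it can be as simple as comparing median TAT before and during the pilot. The median is used here because turnaround times are often skewed by a few outlier cases, such as cases awaiting extra stains. The values below are hypothetical, and `tat_reduction` is an illustrative helper, not part of any LIS.

```python
from statistics import median


def tat_reduction(baseline_hours, pilot_hours):
    """Relative reduction in median turnaround time (TAT), as a fraction of the baseline."""
    base, pilot = median(baseline_hours), median(pilot_hours)
    return (base - pilot) / base


# Hypothetical TATs (hours from accessioning to sign-off) for prostate biopsies.
baseline = [72, 80, 65, 90, 70, 75, 85]
pilot = [50, 55, 48, 60, 52, 58, 47]

TARGET = 0.30  # the example pilot goal from the text
reduction = tat_reduction(baseline, pilot)
print(f"median TAT reduction: {reduction:.0%} (target met: {reduction >= TARGET})")
# prints: median TAT reduction: 31% (target met: True)
```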

2. Iteration: Treat Every Phase as an Opportunity

After the pilot, learning is just as important as the results.

Questions that must be addressed:

Did it integrate smoothly with LIS/PACS?

Do images load fast enough, or are there latency issues?

Do pathologists understand and trust what the model shows?

Are there redundant steps introduced by the tool that can be eliminated?

Iteration isn’t a delay; it’s the insurance policy that prevents a collapse when the project scales.
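
Latency is easier to discuss with numbers than with impressions. The minimal sketch below assumes slide-load times, in seconds, can be exported from viewer or server logs. It reports the 95th percentile alongside the mean, because a handful of very slow loads can frustrate users even when the average looks fine. The 3-second budget and the sample values are hypothetical.

```python
from statistics import mean, quantiles


def latency_summary(load_times_s, budget_s):
    """Summarise slide-load times; flag when the 95th percentile exceeds the budget."""
    # n=20 yields 19 cut points; index 18 is the 95th percentile.
    p95 = quantiles(load_times_s, n=20, method="inclusive")[18]
    return {"mean": mean(load_times_s), "p95": p95, "over_budget": p95 > budget_s}


# Hypothetical load times (seconds) for ten slide openings during a pilot week.
times = [1.2, 1.4, 1.1, 1.3, 1.5, 1.2, 1.4, 6.8, 1.3, 1.2]
summary = latency_summary(times, budget_s=3.0)
print(summary)
```

Here the mean (about 1.8 s) sits well under budget while the 95th percentile (above 4 s) does not. Averages tend to hide exactly this kind of friction.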

3. Scaling Without Undermining Trust

Once the pilot is optimized, scaling should be progressive, not aggressive:

Expand first to areas with similar workflows (if it worked in prostate, move next to colon or breast).

Ensure infrastructure (servers, storage, IT support) can grow alongside demand.

Communicate upcoming changes clearly so staff understand how it impacts their work.

Maintain a dedicated support team available to resolve issues and questions in real time.

Scaling too quickly can overload systems, frustrate teams, and turn a promising tool into a burden.

Remember

Bringing AI into hospital workflows isn’t about speed—it’s about trust.

When the clinical team feels the tool is helping (not hindering) their work, and when the infrastructure can handle growth, AI stops being “the new thing” and becomes a natural, reliable part of the workflow.

Because in a hospital, true innovation isn’t measured by how quickly it’s adopted, but by how deeply it improves clinical practice.

Change Management: Clinical Acceptance, Explainability, and Continuous Training

In many hospital AI projects, the technical side moves quickly: the models work, the servers hold, the data flows.

The real challenge is rarely technological. It’s human—getting the clinical team to adopt the tool, trust it, and integrate it into their daily practice.

An algorithm can be trained in months. Earning a pathologist’s trust can take far longer… if done poorly.

1. Clinical Acceptance Starts Before Installation

One of the biggest mistakes is introducing the tool at the very end, as if it were a surprise. This almost guarantees resistance:

“Another system making my job harder.”

“Is this here to replace me?”

“Why should I trust something I wasn’t involved in building?”

The key is to involve the team from the start:

Engage pathologists and technicians in identifying which real problems the AI should solve.

Include them in the validation phase so they see its direct impact.

Publicly acknowledge their role as experts who help “train and oversee” the tool, rather than being replaced by it.

2. Explainability as a Bridge to Trust

A model that delivers results without showing how it reached them will always raise doubts, even if it’s accurate.

Healthcare AI can’t be a “black box”:

It should provide clear visualizations (e.g., heatmaps, highlighted regions) to help interpret its decisions.

It must allow the pathologist to correct or confirm outputs (human-in-the-loop).

Ideally, it should present interpretable metrics (probabilities, confidence intervals) alongside its predictions.

When a tool explains itself, the clinical team is far more likely to view it as an ally, not a threat.
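
The following sketch shows how a heatmap might be assembled from a tile-based model. Many whole-slide classifiers score fixed-size tiles, and those per-tile probabilities can be laid out on the slide’s tile grid for overlay in the viewer. The sketch assumes the model returns one probability per (row, column) tile index. The function names and the 0.5 review threshold are hypothetical. The ranked list it returns is the kind of output a pathologist would then confirm or correct (human-in-the-loop).

```python
import numpy as np


def build_heatmap(tile_scores, grid_shape):
    """Place per-tile probabilities on a 2-D grid; untiled cells (e.g. background) stay NaN."""
    heatmap = np.full(grid_shape, np.nan)
    for (row, col), prob in tile_scores.items():
        heatmap[row, col] = prob
    return heatmap


def regions_for_review(heatmap, threshold=0.5):
    """Return (row, col, probability) for tiles at or above threshold, highest first."""
    scores = np.where(np.isnan(heatmap), -1.0, heatmap)  # untiled cells never pass the threshold
    rows, cols = np.nonzero(scores >= threshold)
    order = np.argsort(-scores[rows, cols], kind="stable")
    return [(int(rows[i]), int(cols[i]), float(scores[rows[i], cols[i]])) for i in order]


# Hypothetical tile probabilities on a 3 x 2 grid; tile (2, 0) is background.
tiles = {(0, 0): 0.05, (0, 1): 0.72, (1, 0): 0.10, (1, 1): 0.91, (2, 1): 0.40}
hm = build_heatmap(tiles, grid_shape=(3, 2))
print(regions_for_review(hm, threshold=0.5))
# prints: [(1, 1, 0.91), (0, 1, 0.72)]
```

A heatmap shows where the model scored highly, not why. It supports the pathologist’s interpretation rather than replacing it.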

3. Continuous Training: Keeping AI From Collecting Dust

Many projects fail not because the tool is ineffective, but because the team doesn’t receive sufficient training.

One-time training isn’t enough: there must be short, recurring sessions, especially when there are updates or workflow changes.

Establish internal ambassadors (motivated pathologists or lab techs) who can help peers in daily use.

Host sessions where staff can share feedback on issues or improvements, increasing ownership and reducing resistance.

True adoption isn’t achieved through strong technology alone, but through empowered people who understand and trust the tool.

Remember

AI in pathology doesn’t replace the pathologist. It depends on the pathologist, both to work well and to gain credibility.

Without acceptance, explainability, and training, even the best tool will end up forgotten on a server.

Digital transformation in healthcare isn’t just a software shift.

It’s a cultural shift built on transparency, education, and continuous collaboration.

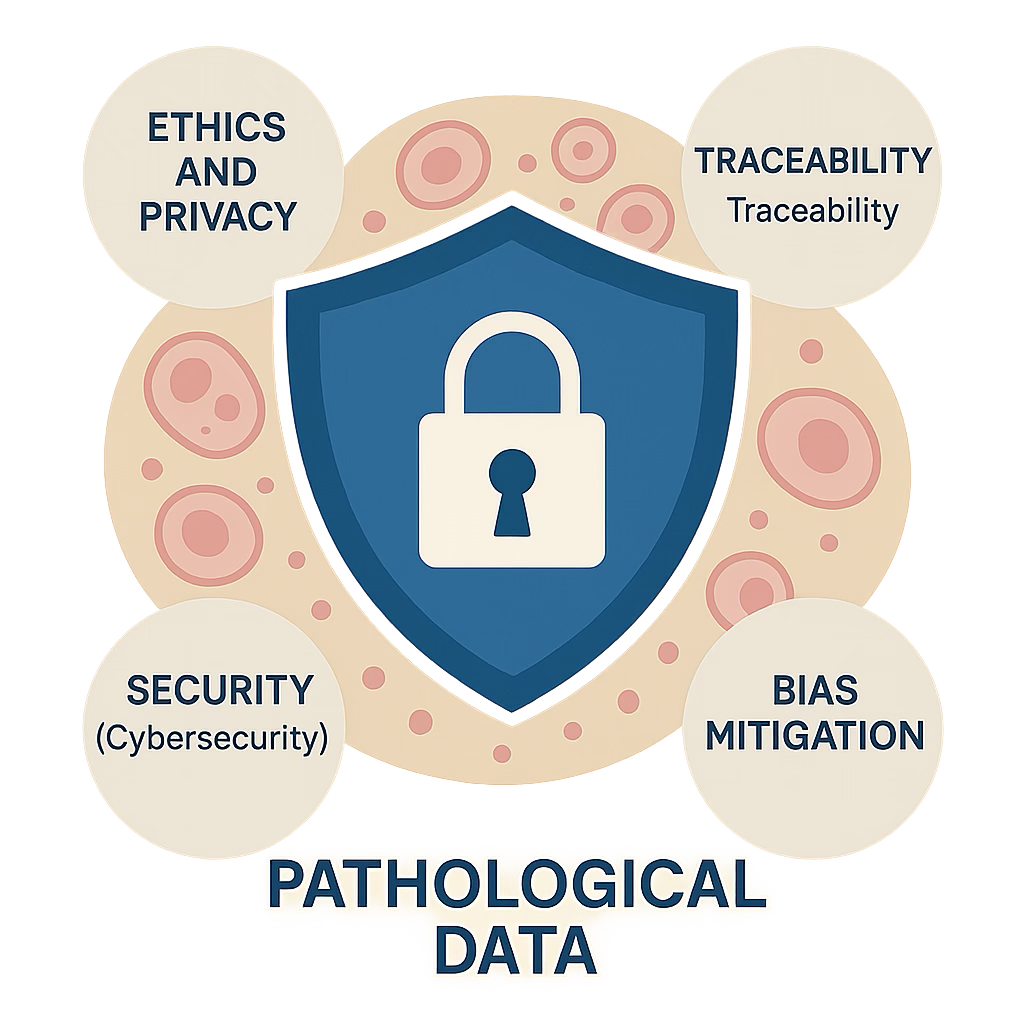

Data Governance: Ethics, Traceability, Security, and Bias

In digital pathology, data is the beating heart of the entire system—from WSI files to annotations, validation metrics, and the clinical reports that AI models help generate.

And like any heart, if its flow isn’t carefully controlled, the entire system is at risk.

Hospital AI isn’t measured only by accuracy. It’s also measured by how information is managed and protected at every step.

A model can deliver a correct diagnosis, but if it compromises security or privacy, or introduces bias, the institutional damage can outweigh any benefit.

1. Ethics and Privacy: More Than a Legal Checkbox

Complying with regulations like GDPR or HIPAA is non-negotiable, but it’s also not enough.

True ethical governance means:

Consent and anonymization wherever possible.

Clarity on secondary data use (e.g., using historical data to train models).

Transparency about AI’s role and limits, so it’s never mistaken for a substitute for clinical judgment.

When patients and professionals understand how and why their data is used, institutional trust grows stronger.

2. Traceability: Always Knowing a Data Point’s Origin

Digital pathology data often undergoes multiple transformations: scanning, processing, annotation, storage, analysis.

If traceability is lost at any stage:

Clinical results can’t be reliably validated.

Findings can’t be reproduced or audited.

The institution is exposed to regulatory or legal risk.

Every image and every AI output must carry an auditable trail—who generated it, when, how, and under what parameters.
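
One way to make the “who, when, how, and under what parameters” requirement concrete is a provenance record for every AI run. Each record carries SHA-256 fingerprints of the input image and the stored output, so either can later be checked for alteration. The minimal Python sketch below uses only the standard library. The field names, model name, and operator ID are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    """One provenance entry: which input, which model and settings, who ran it, when, what came out."""
    input_sha256: str    # fingerprint of the exact image bytes analysed
    model_name: str
    model_version: str
    parameters: dict     # run settings, e.g. the decision threshold
    operator: str        # user or service account that triggered the run
    timestamp_utc: str
    output_sha256: str   # fingerprint of the result as stored


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def make_record(image_bytes, result, model_name, model_version, parameters, operator):
    # Serialise the result deterministically so the same output always hashes the same way.
    result_bytes = json.dumps(result, sort_keys=True).encode("utf-8")
    return AuditRecord(
        input_sha256=sha256_hex(image_bytes),
        model_name=model_name,
        model_version=model_version,
        parameters=parameters,
        operator=operator,
        timestamp_utc=datetime.now(timezone.utc).isoformat(),
        output_sha256=sha256_hex(result_bytes),
    )


def verify_input(record, image_bytes):
    """True if the image bytes are identical to those the record was created from."""
    return record.input_sha256 == sha256_hex(image_bytes)


# Hypothetical run; the bytes stand in for a real WSI file.
image = b"placeholder bytes standing in for a whole-slide image"
rec = make_record(image, {"label": "suspicious", "score": 0.87},
                  model_name="prostate-screen", model_version="1.4.2",
                  parameters={"threshold": 0.5}, operator="pathologist_017")
print(json.dumps(asdict(rec), sort_keys=True))  # e.g. one line appended to a JSON Lines log
print(verify_input(rec, image))                 # True
print(verify_input(rec, image + b"x"))          # False
```

For multi-gigabyte WSI files, the hash would in practice be computed by reading the file in chunks and calling `update()` on the hash object, rather than loading the whole file into memory.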

3. Security: Protecting More Than Just Images

A single breach of servers holding histological data can jeopardize thousands of studies, compromise patient privacy, and halt any digital initiative for months.

Minimum best practices include:

Encryption at rest and in transit.

Segmented access with multi-factor authentication.

Automated backups and routine disaster recovery testing.

Clear incident response protocols for cybersecurity threats.

An AI model is worthless if the hospital can’t guarantee the security of the ecosystem it runs on.

4. Bias and Fairness: The Silent Threat

Models trained on poorly representative datasets can:

Over- or under-diagnose specific patient groups (e.g., by race or sex).

Fail to generalize across hospitals with different protocols.

Perpetuate inequities if their outputs aren’t critically monitored.

Mitigating bias requires:

Diversifying training and validation datasets.

Monitoring model performance across subpopulations and adjusting as needed.

Transparent communication about a model’s limitations.
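
Monitoring across subpopulations can start with something as simple as comparing each group’s sensitivity to the overall figure and flagging large gaps. In the hedged Python sketch below, groups might be demographic categories, scanners, or referring sites. The 5-point gap and the 30-positive minimum are hypothetical thresholds that a governance committee would need to set, and the same check would apply to specificity.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: (group, y_true, y_pred) tuples, 1 = disease present. Returns {group: (sensitivity, positives)}."""
    detected, positives = defaultdict(int), defaultdict(int)
    for group, truth, pred in records:
        if truth == 1:
            positives[group] += 1
            detected[group] += int(pred == 1)
    return {g: (detected[g] / positives[g], positives[g]) for g in positives}


def flag_gaps(records, max_gap=0.05, min_positives=30):
    """Compare each group's sensitivity with the overall value and flag large shortfalls."""
    preds_on_positives = [pred for _, truth, pred in records if truth == 1]
    overall = sum(p == 1 for p in preds_on_positives) / len(preds_on_positives)
    flagged = {}
    for group, (sens, n_pos) in sensitivity_by_group(records).items():
        if n_pos < min_positives:
            flagged[group] = "too few positive cases to judge"
        elif overall - sens > max_gap:
            flagged[group] = f"sensitivity {sens:.2f} vs overall {overall:.2f}"
    return overall, flagged


# Hypothetical monitoring data.
records = (
    [("group_A", 1, 1)] * 38 + [("group_A", 1, 0)] * 2
    + [("group_B", 1, 1)] * 30 + [("group_B", 1, 0)] * 10
    + [("group_C", 1, 1)] * 9 + [("group_C", 1, 0)] * 1
    + [("group_A", 0, 0)] * 50  # negatives do not affect sensitivity
)
overall, flagged = flag_gaps(records)
print(f"overall sensitivity: {overall:.2f}")  # prints: overall sensitivity: 0.86
for group, reason in flagged.items():
    print(group, "->", reason)
# prints: group_B -> sensitivity 0.75 vs overall 0.86
# prints: group_C -> too few positive cases to judge
```

Flagging a group for having too few cases matters as much as flagging a gap. Small groups are often exactly where a model has seen the least data.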

Remember

Data governance isn’t just a technical or legal requirement. It’s the foundation of trust for any hospital AI project.

When data is well-managed, secure, and auditable, technology earns legitimacy.

When it’s not, even the best model is seen as a liability rather than a solution.

AI in pathology can’t just be accurate.

It must be responsible, traceable, and secure to be truly useful.

From Model to Trust: Building Real and Sustainable Adoption

Validating a model, deploying it gradually, and integrating it into hospital workflows are essential steps. But long-term adoption—where the team naturally uses and trusts the tool day to day—depends on something deeper: a mindset shift.

Traditional clinical environments are built around fixed protocols, minimal error tolerance, and stable processes.

The digital world, by contrast, thrives on:

Rapid iteration and “live” pilots that evolve over time.

Trial and error as part of learning, not a sign of failure.

Ongoing training and adaptation, because tools evolve as fast as the challenges.

1. Training Beyond the Technical

Teaching staff how to use a digital viewer or interpret an algorithmic result isn’t enough.

Training must also cover:

How to handle the uncertainty of early versions.

How to provide useful feedback to improve the system.

How to identify opportunities for improvement during each iteration.

This empowers teams to become active drivers of change, not passive users.

2. Building a Culture of Testing and Continuous Improvement

Hospitals that successfully integrate AI share one trait: they’ve cultivated a collective digital mindset.

They view every pilot as a learning and refinement space, not as a disruption to routine.

They see clinical team feedback as vital, not optional, to implementation.

They create safe environments for staff to experiment without fear of “getting it wrong.”

3. Planting the Cultural Shift

This mindset shift doesn’t just happen.

It requires:

Leaders who communicate clearly and give purpose to the change.

Frequent, practical training, shorter and more focused than traditional sessions.

Internal mentors or “digital ambassadors” who guide teams and bridge the gap with developers or consultants.

In upcoming posts, we’ll explore how hospitals and labs can build this digital culture in a structured way.

Because AI doesn’t transform a department through accuracy alone.

It transforms it when people learn to work with it, adapt as it evolves, and trust its potential.

Closing the Chapter – From Planning to Practice

In this second part of our guide, we’ve moved beyond the foundations and into the messy, human side of AI adoption.

We’ve seen that success in real-world settings isn’t just about models and servers.

It’s about validation across every dimension, careful deployment, a team that feels empowered, and a culture willing to evolve.

Because AI can be technically flawless and still fail if trust isn’t built.

And in hospitals, trust is what makes technology more than a tool—it makes it part of the practice.

Next Week: Part 3 – The Real Payoff

📅 In the final part of this series, we’ll tackle the question every decision-maker asks:

“Is it worth it?”

Part 3 – “The Real Payoff: Clinical, Operational, and Economic Impact of AI in Pathology.”

We’ll break down:

How AI can improve diagnostic precision and patient outcomes.

Operational benefits like reduced turnaround times and lower burnout.

Economic value: cost savings, new revenue streams, and telepathology opportunities.

Case studies showing what real ROI looks like.

Because after building the groundwork and navigating adoption, it’s time to see what AI can truly deliver for hospitals, labs, and patients.