How close are we to true multimodality in Digital Pathology?

Tracing the path from digital slides to integrated intelligence and what it means for the future of precision medicine.

Digital pathology (DP) represents a fundamental transformation of medical diagnosis, enabled by Whole Slide Imaging (WSI). By digitizing entire tissue samples into high-resolution images, WSI not only enables remote collaboration and standardized workflows but, crucially, lays the foundation for artificial intelligence (AI) integration (1).

AI systems in DP can analyze histological slides with greater consistency and speed than manual review, identifying anomalies such as cancer cells or inflammatory processes. In prostate and gastrointestinal pathology, for example, AI models have already demonstrated high accuracy, reducing workload and improving diagnostic precision (2).

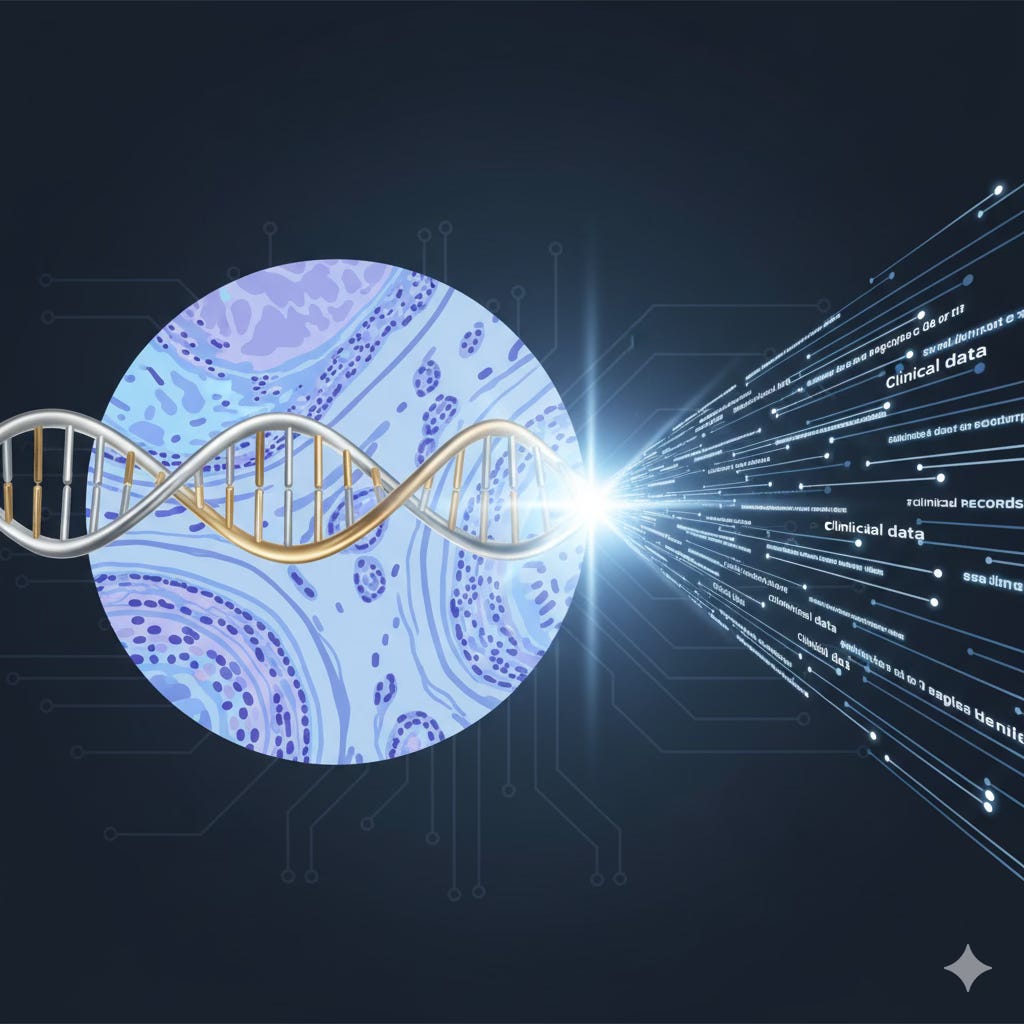

In oncology, however, diagnosis, prognosis, and prediction of therapeutic response have traditionally relied on two distinct sources of information that are often analyzed in isolation: the detailed morphological data from histology slides and the molecular profiles derived from genomic data (3).

The need for multimodality stems from the recognition that most current deep learning paradigms exploit either histology or genomics alone, without systematically combining their complementary information. Multimodal AI seeks to merge these heterogeneous data sources into a more complete and robust picture of disease biology (3).

Core Dimensions of Multimodality in Pathology

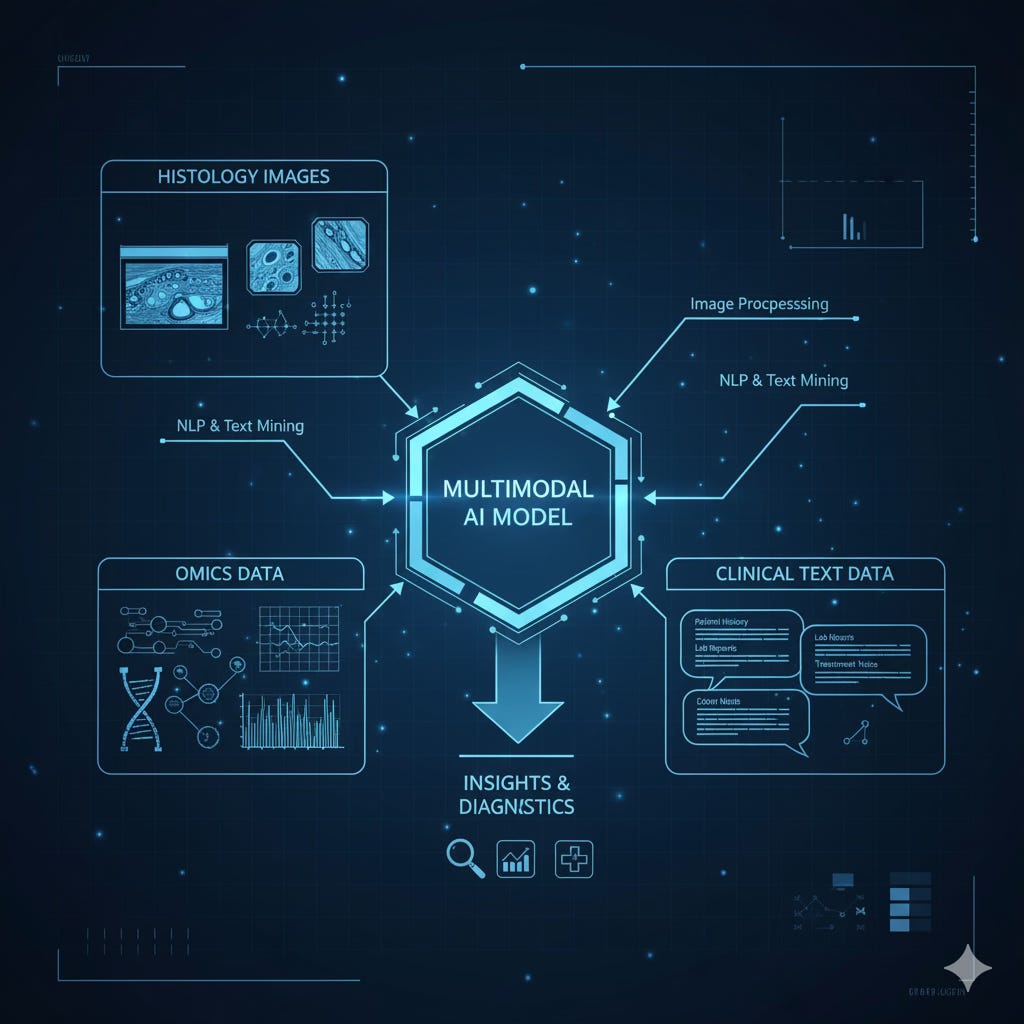

Multimodality in digital pathology is defined by the synergistic integration of at least three primary data dimensions:

Histopathology: Whole Slide Images (WSIs), typically stained with Hematoxylin and Eosin (H&E), capturing tissue morphology and the tumor microenvironment (3).

Molecular (Omic) Data: Genomic (mutations, copy number variations), epigenomic (DNA methylation), transcriptomic (RNA-Seq), and proteomic profiles that characterize the tumor’s biological activity.

Clinical and Textual Data: Structured and unstructured information from Electronic Health Records (EHRs), including pathology reports, radiology studies, and clinical notes that provide patient context (4).

Together, these layers form the foundation of multimodal pathology, where images, molecules, and medical narratives converge to inform a unified, data-driven diagnosis.

Foundations of Digital Pathology and Multimodal Data Resources

The WSI Ecosystem: The Foundation of AI in Pathology

Whole Slide Imaging (WSI) is the cornerstone that enables advanced analytics and the integration of AI into diagnostic workflows and precision medicine (1).

Despite its intrinsic advantages, early adoption of WSI faced significant hurdles: infrastructure limitations, regulatory barriers, and the pressing need to integrate with cloud-based platforms and Big Data analytics systems (1).

The digital transformation of pathology therefore depends on overcoming these barriers so that computational pathology can reach its full potential, not only as a digitization process, but as an intelligence layer capable of learning from every pixel and every patient.

Curating Multimodal Resources: TCGA and CPTAC

The development of multimodal AI is intrinsically tied to the availability of large, paired datasets.

The Cancer Genome Atlas (TCGA) remains one of the most pivotal resources, having profiled 33 tumor types at the genomic, transcriptomic, and proteomic levels (5).

Its true value for multimodality lies in its extensive archive of digital H&E slides paired with molecular and clinical data (6).

Initially, these slides were used for diagnostic verification, but their latent potential lies in enabling machine learning to extract histological features and correlate them with molecular and clinical outcomes (6).

Complementing TCGA, the Clinical Proteomic Tumor Analysis Consortium (CPTAC) provides detailed proteogenomic data across multiple cancers (7).

Standardized benchmarking datasets, such as CAMELYON (lymph node metastases in breast cancer) and PANDA (Gleason grading in prostate cancer), are vital for ensuring reproducibility and rigorous validation of computational pathology methods (8).

The scarcity of large, well-annotated multimodal cohorts underscores why the creation of centralized and standardized repositories has become a strategic imperative. In Europe, the movement toward large-scale, harmonized data infrastructures marks a turning point: from fragmented research silos to a truly continental network for multimodal discovery.

Large-Scale Infrastructure Initiatives

Major infrastructure initiatives aim to address the challenges of scale, legality, and governance inherent to multimodal AI.

The BIGPICTURE project, funded by the EU’s Innovative Medicines Initiative (IMI2), is pioneering a centralized, ethically compliant infrastructure capable of storing, sharing, and processing millions of digital slides. Its goal is ambitious: to collect and manage three million WSI files and their associated clinical metadata for the development of AI tools (9).

This scale illustrates the sheer complexity of storing and processing gigapixel images and explains why early AI systems focused on small Regions of Interest (ROIs).

Transitioning toward whole-slide-level models has now become essential to capture global pathological context and effectively integrate molecular or clinical data.
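Working at whole-slide level usually means treating a slide as a "bag" of thousands of tile embeddings and learning how to pool them into one representation. Attention-based multiple-instance learning (MIL) is one common way to do this.

The minimal sketch below makes several assumptions:

- The tile embeddings have already been extracted by some encoder.
- The attention vector is a fixed, hypothetical stand-in for learned parameters.
- Simple dot-product scoring replaces a trained attention network.

```python
import math


def softmax(scores):
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(patch_embeddings, attention_vector):
    """Pool a bag of patch (tile) embeddings into a single slide embedding.

    attention_vector is a hypothetical stand-in for learned attention
    parameters; each patch is scored here with a plain dot product.
    """
    scores = [sum(a * x for a, x in zip(attention_vector, patch)) for patch in patch_embeddings]
    weights = softmax(scores)
    dim = len(patch_embeddings[0])
    # The slide embedding is the attention-weighted average of its patches.
    slide = [sum(w * patch[d] for w, patch in zip(weights, patch_embeddings)) for d in range(dim)]
    return slide, weights


# Three toy 2-D patch embeddings; the first one scores highest.
slide, weights = attention_pool([[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]], [2.0, 0.0])
print([round(w, 3) for w in weights], [round(v, 3) for v in slide])
```

The attention weights double as a coarse heatmap. Tiles with high weight are the ones that most influenced the slide-level representation.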

Beyond technical infrastructure, BIGPICTURE proactively addresses legal and ethical requirements, ensuring patient data privacy before any R&D use (9).

Its emphasis on data governance and systematic benchmarking, evaluating AI tools against independent reference datasets, is critical for building clinical trust in AI-powered pathology (10).

AI Models for Modality Fusion: Architectures and Strategies

Research efforts in computational pathology have increasingly focused on developing architectures capable of effectively integrating the morphological information contained in WSIs with molecular profiles — a key step toward overcoming the limitations of single-modality analysis.

Deep Feature Fusion

A persistent challenge in predictive oncology is that most deep-learning paradigms treat histology and genomics independently, failing to exploit their complementary nature (3). To bridge this gap, several architectures have been proposed that directly fuse representations from both domains.

Pathomic Fusion is one of the most interpretable and influential frameworks for end-to-end fusion of features from histological images and genomic data (mutations, copy-number variations, RNA-Seq) (3). Its core mechanism models pairwise feature interactions across modalities using a Kronecker product, combined with a gated attention mechanism that adaptively modulates the contribution of each modality.
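The fusion step described above can be sketched in a few lines. This is a simplified illustration, not the Pathomic Fusion reference implementation.

- The input embeddings, gate weights and function names are hypothetical.
- Each modality gets a single scalar gate computed from both modalities. The published model learns richer gating.
- A constant 1 is appended to each gated vector before the Kronecker product. This keeps the unimodal features in the fused vector alongside every pairwise cross-modal product.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def kronecker(a, b):
    # Flattened outer product: every pairwise product a_i * b_j.
    return [ai * bj for ai in a for bj in b]


def gated_kronecker_fusion(h_path, h_omic, w_gate_path, w_gate_omic):
    """Simplified gated Kronecker fusion of two modality embeddings.

    w_gate_path / w_gate_omic are hypothetical gate weights over the
    concatenated embeddings; in a real model they are learned.
    """
    joint = h_path + h_omic  # list concatenation of both modalities
    g_path = sigmoid(sum(w * x for w, x in zip(w_gate_path, joint)))
    g_omic = sigmoid(sum(w * x for w, x in zip(w_gate_omic, joint)))
    gated_path = [g_path * x for x in h_path]
    gated_omic = [g_omic * x for x in h_omic]
    # Appending 1 keeps unimodal terms next to the bimodal interaction terms.
    return kronecker(gated_path + [1.0], gated_omic + [1.0])


fused = gated_kronecker_fusion([2.0, 3.0], [5.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0])
print(fused)  # [2.5, 1.0, 3.75, 1.5, 2.5, 1.0]
```

The fused vector grows multiplicatively. Two 32-dimensional embeddings already yield 33 × 33 = 1,089 entries, which is one reason to keep each modality's embedding compact before fusing.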

Validated on TCGA datasets for glioma and clear-cell renal carcinoma, Pathomic Fusion consistently improved prognostic prediction compared to unimodal models.

Crucially, its interpretable design supports spatial and molecular feature attribution, revealing why a given multimodal prediction was made and which regions or genes were most influential. This interpretability is one of the main reasons Pathomic Fusion is so often cited as a foundational reference in multimodal computational pathology.

Self-Supervised and Contrastive Representation Alignment

A more recent trend leverages Self-Supervised Learning (SSL) and contrastive alignment to match latent representations from different modalities, without requiring direct diagnostic labels. This enables models to learn the molecular fingerprints embedded in tissue morphology.

CLOVER (Contrastive Learning for Omics-Guided Whole-Slide Visual Embedding Representation) exemplifies this approach. It employs a symmetric cross-modal contrastive loss that maximizes the similarity between each slide embedding and its corresponding multi-omic embedding (mRNA, methylation, CNV) while minimizing the similarity of mismatched pairs (11).
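A symmetric cross-modal contrastive objective of this kind can be sketched as follows. This is a generic CLIP-style (InfoNCE) formulation under stated assumptions, not CLOVER's published code.

- Row i of each batch holds the slide embedding and the multi-omic embedding of the same patient.
- Every other pairing in the batch is treated as a negative.
- The temperature of 0.07 is an illustrative value.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def cross_entropy_on_diagonal(logits):
    # For row i, the correct "class" is column i (the same patient).
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def symmetric_contrastive_loss(slide_embs, omic_embs, temperature=0.07):
    n = len(slide_embs)
    # logits[i][j]: temperature-scaled similarity of slide i and omics profile j.
    logits = [[cosine(slide_embs[i], omic_embs[j]) / temperature for j in range(n)] for i in range(n)]
    transposed = [list(col) for col in zip(*logits)]
    # Average the slide->omics and omics->slide directions.
    return 0.5 * (cross_entropy_on_diagonal(logits) + cross_entropy_on_diagonal(transposed))


slides = [[1.0, 0.0], [0.0, 1.0]]
print(symmetric_contrastive_loss(slides, [[1.0, 0.0], [0.0, 1.0]]))  # matched pairs: near 0
print(symmetric_contrastive_loss(slides, [[0.0, 1.0], [1.0, 0.0]]))  # swapped pairs: large
```

Minimizing this loss pulls each slide toward its own patient's molecular profile and away from the other profiles in the batch. This is how the image encoder can absorb molecular signal without diagnostic labels.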

The success of CLOVER suggests that H&E morphology carries deep molecular signals that AI can decode, potentially allowing a single slide to serve as a proxy for slower, more expensive molecular assays.

CLOVER excels in few-shot learning scenarios for cancer subtyping and biomarker prediction (ER, PR, HER2), and uses integrated gradients to highlight the molecular features driving alignment (11). This not only boosts performance but also offers insights into the molecular basis of visible histological patterns, a step toward interpretable biology.
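Integrated gradients attributes a prediction to input features by accumulating gradients along a straight path from a baseline input to the actual input.

The sketch below makes two assumptions:

- The model is a hypothetical logistic regression over three molecular features, so the gradient has a closed form. In practice the gradients would come from a deep network via automatic differentiation.
- The path integral is approximated with a midpoint Riemann sum.

```python
import math


def predict(x, w, b):
    # Hypothetical differentiable model: logistic regression on features x.
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def gradient(x, w, b):
    # d sigmoid(z) / d x_i = s * (1 - s) * w_i
    s = predict(x, w, b)
    return [s * (1.0 - s) * wi for wi in w]


def integrated_gradients(x, baseline, w, b, steps=200):
    summed = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of each sub-interval of [0, 1]
        point = [bi + alpha * (xi - bi) for xi, bi in zip(x, baseline)]
        summed = [s + g for s, g in zip(summed, gradient(point, w, b))]
    # Average gradient along the path, scaled by the distance from the baseline.
    return [(xi - bi) * s / steps for xi, bi, s in zip(x, baseline, summed)]


x, baseline, w, b = [1.0, 2.0, 0.0], [0.0, 0.0, 0.0], [0.5, -1.0, 3.0], 0.1
attributions = integrated_gradients(x, baseline, w, b)
print([round(a, 4) for a in attributions])
```

The attributions sum approximately to the difference between the prediction at the input and at the baseline. This "completeness" property is what makes integrated gradients attractive for explaining which features drove a given output.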

Pushing the frontier further, SPADE (Spatial Transcriptomics and Pathology Alignment) addresses the spatial dimension of multimodality by integrating WSIs with spatial transcriptomics (ST) data (12). ST captures the molecular heterogeneity of tissue in situ, information often lost in traditional H&E slides. SPADE uses a Mixture-of-Data Experts architecture combined with contrastive learning to align co-registered WSI patches with their gene-expression profiles, creating a joint latent space informed by spatial biology (12).

This alignment significantly improves few-shot performance across multiple downstream tasks, illustrating the power of integrating morphology and spatial molecular information within a unified multimodal representation.
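Few-shot evaluations of this kind typically freeze the pretrained encoder and fit only a lightweight classifier on a handful of labeled examples per class. A minimal sketch of one such protocol is shown below: a nearest-prototype (class-centroid) classifier using cosine similarity.

The embeddings and subtype labels are hypothetical. The cited studies may use other protocols, such as linear probing.

```python
import math


def mean_vector(vectors):
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def fit_prototypes(support):
    """support: dict mapping a class label to a few frozen embeddings."""
    return {label: mean_vector(vectors) for label, vectors in support.items()}


def classify(prototypes, query):
    # Assign the query to the class whose prototype is most similar.
    return max(prototypes, key=lambda label: cosine(query, prototypes[label]))


support = {
    "subtype_A": [[1.0, 0.1], [0.9, 0.0]],
    "subtype_B": [[0.1, 1.0], [0.0, 0.8]],
}
prototypes = fit_prototypes(support)
print(classify(prototypes, [0.8, 0.3]))
```

Because only the class centroids are "trained", the quality of the result depends almost entirely on how well the pretrained embedding space separates the classes. This is precisely what few-shot benchmarks are meant to probe.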

Foundation Models and the Future of Generative AI in Pathology

The emergence of foundation models (FMs) has expanded the capabilities of AI in pathology beyond narrow, task-specific systems toward generalist, reasoning-based architectures.

These models, pre-trained on vast and diverse datasets, are transforming how information is learned, represented, and transferred across medical domains.

The Evolution Toward Generalist Multimodal Models

Foundation models, by being exposed to massive amounts of heterogeneous data, can capture universal representations that generalize across multiple tasks, from rare disease detection to prognostic prediction and biomarker discovery (13).

BiomedCLIP, for example, is a large-scale multimodal biomedical foundation model trained on over 15 million scientific image–text pairs (14). This diversity enables robust cross-domain transfer learning: BiomedCLIP has even outperformed specialized models on some radiology tasks, underscoring the power of multimodal pre-training. Its capacity to unify visual and textual representations shows how broad, domain-informed pre-training can yield models whose representations transfer across pathology, radiology, and molecular medicine alike (14).
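CLIP-style models such as BiomedCLIP can classify images without task-specific training. They compare an image embedding against embeddings of text prompts, one prompt per candidate class.

The sketch below shows only that scoring logic, under these assumptions:

- The vectors are hypothetical placeholders for encoder outputs.
- The temperature is illustrative.
- No BiomedCLIP API is called.

```python
import math


def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def zero_shot_classify(image_emb, prompt_embs, temperature=0.01):
    """image_emb: image-encoder output for one image (hypothetical values).
    prompt_embs: dict mapping each class name to the text-encoder output for a
    prompt such as "an H&E image of <class>" (hypothetical values)."""
    img = normalize(image_emb)
    sims = {label: sum(a * b for a, b in zip(img, normalize(t))) for label, t in prompt_embs.items()}
    # Temperature-scaled softmax over cosine similarities gives class probabilities.
    top = max(sims.values())
    exps = {label: math.exp((s - top) / temperature) for label, s in sims.items()}
    total = sum(exps.values())
    probs = {label: e / total for label, e in exps.items()}
    return max(probs, key=probs.get), probs


label, probs = zero_shot_classify(
    [0.9, 0.1, 0.0],
    {"tumor": [1.0, 0.0, 0.0], "normal tissue": [0.0, 1.0, 0.0]},
)
print(label)
```

Adding a new class only requires writing a new prompt, not retraining. This is the practical appeal of aligned image–text embedding spaces.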

Vision–Language Alignment and Clinical Context

The multimodality of foundation models now extends beyond omic integration to encompass the clinical narratives contained in pathology reports and Electronic Health Records (EHRs) (4).

A prime example is TITAN, a whole-slide-level foundation model pre-trained on 335,000 WSIs through self-supervised visual learning, combined with vision–language (V-L) alignment on 183,000 paired slides and pathology reports (15).

This three-stage pre-training pipeline aligns local Regions of Interest (ROI) and full-slide representations with corresponding text reports, effectively injecting clinical reasoning into the model’s latent space (15).

By aligning images with expert-written pathology reports, TITAN learns representations that mirror elements of the diagnostic reasoning process. It can generate pathology-report-like summaries, retrieve similar cases, and generalize to low-resource scenarios such as rare diseases (15), all without task-specific fine-tuning. This represents a paradigm shift: from AI detecting patterns to AI reasoning across modalities.
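Case retrieval with a slide-level embedding model reduces to a nearest-neighbor search in the shared embedding space.

A minimal sketch follows. It assumes slide embeddings have already been computed and stored in a hypothetical in-memory archive keyed by case ID. A production system would typically use an approximate nearest-neighbor index rather than the exhaustive sort shown here.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve_similar_cases(query, archive, k=3):
    """archive: dict mapping a case ID to its precomputed slide embedding.
    Ranks every archived case by cosine similarity to the query slide."""
    ranked = sorted(archive, key=lambda case_id: cosine(query, archive[case_id]), reverse=True)
    return ranked[:k]


archive = {"case-A": [1.0, 0.0], "case-B": [0.7, 0.7], "case-C": [0.0, 1.0]}
print(retrieve_similar_cases([1.0, 0.1], archive, k=2))  # ['case-A', 'case-B']
```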

Building on slide–report alignment, a new wave of vision–language models is emerging, ranging from contrastive foundation models such as CONCH (17) to multimodal large language models such as PathChat (16). The latter, typically adapted through instruction tuning, can be extended to gigapixel WSIs (as in SlideChat or WSI-LLaVA). They can function as diagnostic copilots or as agents in automated workflows. Together, they mark a qualitative leap from object detection toward autonomous reasoning and interactive assistance in digital pathology.

Ultimately, the performance and robustness of these models, particularly in data-scarce settings, highlight generalization as the key metric for clinical utility.

In this sense, multimodal foundation models are not merely tools for classification; they are the early scaffolds of agentic AI in pathology, systems that reason, contextualize, and explain.

Standardization, Interoperability, and Infrastructure Challenges

The clinical deployment of multimodal AI in pathology depends critically on a data infrastructure capable of supporting the integration of inherently heterogeneous data sources. Without standardized frameworks and interoperable systems, multimodality remains a research concept rather than a clinical reality.

The Challenge of Interoperability and Data Silos

Digital pathology today remains fragmented by deep interoperability barriers: disconnected systems, proprietary data formats, and the absence of universal standards (18). Most healthcare data exist in isolated silos, with WSIs, radiology images, and textual reports residing in separate management systems, often without shared identifiers.

This lack of cross-referencing prevents the direct correlation of data that is essential for training multimodal AI models (4). True multimodality requires linking morphology, molecular data, and clinical context within a unified ecosystem, one where each pixel, gene, and patient record can be meaningfully connected.
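The cross-referencing problem can be illustrated with a toy join. The sketch below assumes each silo can export records that carry a shared patient identifier. The field name `patient_id` and the sample records are hypothetical.

The sketch merges the sources per patient and flags any patient missing at least one modality. This is the information needed to assemble a paired multimodal training set.

```python
from collections import defaultdict


def link_by_patient(*sources):
    """Each source is a (name, records) pair; every record is a dict with a
    'patient_id' key. Keeps the last record per source and patient."""
    linked = defaultdict(dict)
    names = [name for name, _ in sources]
    for name, records in sources:
        for record in records:
            linked[record["patient_id"]][name] = record
    # Patients absent from any source cannot form a complete multimodal sample.
    incomplete = sorted(pid for pid, modalities in linked.items() if len(modalities) < len(names))
    return dict(linked), incomplete


wsi = [{"patient_id": "P1", "slide": "P1_HE.dcm"}, {"patient_id": "P2", "slide": "P2_HE.dcm"}]
omics = [{"patient_id": "P1", "TP53": "mutated"}]
reports = [{"patient_id": "P1", "text": "free-text report"}, {"patient_id": "P2", "text": "free-text report"}]
linked, incomplete = link_by_patient(("wsi", wsi), ("omics", omics), ("report", reports))
print(incomplete)  # ['P2']
```

Without a shared identifier, no such join is possible. This is why the standards discussed next matter as much as the models.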

The Central Role of DICOM in Digital Pathology

The key to imaging interoperability in medicine lies in the DICOM (Digital Imaging and Communications in Medicine) standard, the globally accepted protocol for storing and exchanging medical images (19).

Its extension to DICOM-WSI is crucial for digital pathology, enabling the efficient storage of gigapixel slides along with their metadata. Through DICOM, WSIs can be directly referenced from Electronic Health Records (via FHIR standards) and accessed efficiently through vendor-neutral APIs (18).

This convergence allows histological data to exist within the same ecosystem as radiology and genomics, a necessary condition for multimodal clinical research and AI integration. In short, DICOM is not just a storage format; it is the lingua franca of medical imaging that underpins the multimodal future of pathology.
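To make the EHR-to-image link concrete, the sketch below builds two things:

- A DICOMweb WADO-RS URL for retrieving a single frame (tile) of a DICOM-WSI instance.
- A minimal FHIR R4 ImagingStudy resource that an EHR could store to point at the same study.

The server URL, UIDs, and patient and endpoint IDs are placeholders. A real resource would usually carry many more optional attributes, and real access would require authentication.

```python
import json


def dicomweb_frame_url(base_url, study_uid, series_uid, instance_uid, frame):
    # WADO-RS path for one frame; in a tiled DICOM-WSI instance a frame is one tile.
    return (f"{base_url}/studies/{study_uid}/series/{series_uid}"
            f"/instances/{instance_uid}/frames/{frame}")


def imaging_study(patient_id, study_uid, series_uid, endpoint_id):
    """Minimal FHIR R4 ImagingStudy that lets an EHR reference a slide study."""
    return {
        "resourceType": "ImagingStudy",
        "status": "available",
        "subject": {"reference": f"Patient/{patient_id}"},
        # The DICOM Study Instance UID, expressed as an OID URN.
        "identifier": [{"system": "urn:dicom:uid", "value": f"urn:oid:{study_uid}"}],
        # Endpoint resource describing the DICOMweb server that holds the pixels.
        "endpoint": [{"reference": f"Endpoint/{endpoint_id}"}],
        "series": [{
            "uid": series_uid,
            # "SM" is the DICOM modality code for slide microscopy.
            "modality": {"system": "http://dicom.nema.org/resources/ontology/DCM", "code": "SM"},
        }],
    }


# Placeholder server and UIDs, for illustration only.
url = dicomweb_frame_url("https://pacs.example.org/dicomweb", "1.2.3", "1.2.3.4", "1.2.3.4.5", 1)
print(url)
print(json.dumps(imaging_study("example-patient", "1.2.3", "1.2.3.4", "example-dicomweb"), indent=2))
```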

Harmonizing Clinical and Imaging Data

To conduct multimodal research at scale, imaging standards must be harmonized with clinical data models. A major breakthrough has been the incorporation of DICOM into the OMOP (Observational Medical Outcomes Partnership) Common Data Model, an initiative that standardizes and represents imaging studies alongside rich observational data (20).

This integration adds more than 5,000 DICOM attributes to the OMOP vocabulary (20), allowing precise description of imaging parameters and their linkage with clinical phenotypes. Such harmonization effectively bridges the historical gap between image-based and patient-based data, creating a unified framework for multimodal analytics (20).

Importantly, this alignment supports data consistency and quality, essential prerequisites for training AI models (18) that are both reliable and reproducible across institutions.

Privacy and Decentralized Collaboration

Concerns over patient privacy and data governance have driven the rise of decentralized architectures such as Federated Learning (FL). FL enables the collaborative training of global AI models across multiple institutions without ever centralizing raw data; only model updates are shared (21).
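The aggregation step at the heart of Federated Averaging (FedAvg), one widely used FL algorithm, fits in a few lines:

- Each site trains locally.
- Each site sends back only its updated parameters and its local sample count.
- The server averages the parameters, weighted by sample count.

The sketch below omits local training, secure aggregation, and communication. The site sizes and parameter vectors are hypothetical.

```python
def federated_average(client_updates):
    """client_updates: list of (num_local_samples, parameters) pairs, one per site.
    Only these parameter vectors are shared; raw slides and records stay on site."""
    total = sum(n for n, _ in client_updates)
    dim = len(client_updates[0][1])
    # Sites with more local data contribute proportionally more to the global model.
    return [sum(n * params[d] for n, params in client_updates) / total for d in range(dim)]


# Two hypothetical hospitals after one round of local training.
global_params = federated_average([(100, [1.0, 2.0]), (300, [3.0, 6.0])])
print(global_params)  # [2.5, 5.0]
```

The averaged parameters are broadcast back to the sites for the next round. Repeating this loop trains a shared model while each institution's data never leaves its firewall.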

Yet, multimodal FL in healthcare faces unique challenges: data modalities are often missing or unevenly distributed across sites due to variations in clinical practice, cost, or technology access. Emerging approaches now use low-dimensional feature translators to reconstruct missing modalities, ensuring model robustness despite heterogeneity.
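The cited approach uses a learned feature-imputation network. The sketch below swaps in the simplest possible translator: a ridge-regularized linear map.

- The map is fitted on patients who have both modalities.
- It is then used to reconstruct a low-dimensional omics feature at a site that only has histology features.

The data are synthetic, and the function names are hypothetical.

```python
def solve(a, b):
    """Solve the small linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_translator(src, tgt, ridge=1e-3):
    """Ridge regression from source features (e.g., histology) to target features
    (e.g., a low-dimensional omics summary); rows are patients with both modalities.
    A constant 1 is appended for the intercept, which is also penalized for simplicity."""
    X = [list(row) + [1.0] for row in src]
    d = len(X[0])
    xtx = [[sum(x[i] * x[j] for x in X) + (ridge if i == j else 0.0) for j in range(d)] for i in range(d)]
    weights = []
    for k in range(len(tgt[0])):
        xty = [sum(x[i] * y[k] for x, y in zip(X, tgt)) for i in range(d)]
        weights.append(solve(xtx, xty))
    return weights


def translate(weights, src_row):
    # Reconstruct the missing modality's features for one patient.
    x = list(src_row) + [1.0]
    return [sum(w * xi for w, xi in zip(w_k, x)) for w_k in weights]


# Synthetic paired data from a site with both modalities (target = 2 * source + 1).
weights = fit_translator([[0.0], [1.0], [2.0], [3.0]], [[1.0], [3.0], [5.0], [7.0]])
# A site with histology features only can now impute the omics feature.
print(translate(weights, [4.0]))
```

Keeping the translated representation low-dimensional makes the mapping cheaper to learn and to share across sites. Any imputed values should still be treated as estimates, not measurements.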

The momentum around FL, together with governance frameworks such as BIGPICTURE that embed legal and ethical compliance by design, shows that privacy and trust are not obstacles to innovation (9) but structural forces shaping the architecture of multimodal AI systems.

Clinical Translation, Validation, and Ethical–Regulatory Considerations

The introduction of multimodal AI into clinical practice demands more than technological excellence: it requires proof of clinical utility, trustworthiness, and regulatory alignment. Bringing these systems from research to the diagnostic bench involves a coordinated effort across validation, workflow integration, and ethical oversight.

Clinical Validation and Utility Assessment

Any new technology in healthcare must justify its relevance and impact on patient care, laboratory operations, and diagnostic accuracy. Digitalization is a necessary step toward enabling AI (22), but validation cannot occur in isolation.

AI-enhanced workflows, which often combine multiple AI tools operating in tandem, must be validated holistically, just like the individual algorithms they integrate (22).

Effective adoption depends on seamless operational integration with existing systems such as Laboratory Information Systems (LIS) and imaging archives (PACS/VNA), both of which must evolve to handle multimodal data natively (19).

In practice, validation is not only a technical process but also an exercise in workflow redesign, ensuring that AI augments, rather than disrupts, clinical reasoning.

Clinical Adoption Models and Commercialization

Large-scale adoption is being accelerated through strategic collaborations between AI leaders and reference oncology centers. Notable examples include the partnership between PathAI and Moffitt Cancer Center (23), and the alliance between Roche Tissue Diagnostics and PathAI for the development of AI-powered Companion Diagnostics (CDx) (24).

These initiatives exemplify a commercial model where clear return on investment (ROI) and demonstrable medical value drive digital transformation.

By embedding AI into the selection of patients for targeted therapies, such as immuno-oncology (IO) agents and antibody–drug conjugates (ADCs) (24), multimodal AI can move from useful to indispensable.

Roche’s Open Environment strategy, which integrates more than 20 third-party AI algorithms (including Deep Bio, Lunit, and Mindpeak) through its Navify® platform, further illustrates how interoperability and open ecosystems can catalyze precision medicine at scale (25).

Ethical Considerations: Bias and Explainability

The proliferation of AI systems in healthcare, particularly multimodal and high-stakes ones, raises pressing ethical concerns about fairness and accountability.

There is a tangible risk of reinforcing healthcare disparities through algorithmic bias, especially when datasets underrepresent certain populations (26).
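A first, simple check for this kind of bias is to stratify performance by subgroup instead of reporting a single aggregate score. The sketch below computes accuracy per subgroup and the largest gap between subgroups.

The labels, predictions and group names are hypothetical. A real audit would add other metrics (for example sensitivity and calibration) and confidence intervals.

```python
from collections import defaultdict


def subgroup_accuracy(y_true, y_pred, groups):
    """Accuracy within each subgroup plus the largest between-group gap."""
    correct = defaultdict(int)
    count = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        count[group] += 1
        correct[group] += int(truth == pred)
    accuracy = {g: correct[g] / count[g] for g in count}
    gap = max(accuracy.values()) - min(accuracy.values())
    return accuracy, gap


# Hypothetical predictions for patients from two patient groups.
accuracy, gap = subgroup_accuracy(
    y_true=[1, 0, 1, 1, 0, 1],
    y_pred=[1, 0, 1, 0, 1, 1],
    groups=["group_A", "group_A", "group_A", "group_B", "group_B", "group_B"],
)
print(accuracy, round(gap, 3))
```

In this toy example, an overall accuracy of 4/6 would hide a stark difference between the two groups. One group is classified perfectly, while the other is classified correctly only once in three cases.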

Explainable AI (XAI) therefore becomes essential: for a pathologist to trust a multimodal prognostic prediction, they must be able to see how the model weights features from both morphology and molecular data. Architectures such as Pathomic Fusion and CLOVER exemplify this principle, incorporating interpretable mechanisms that localize and quantify feature importance (3, 11).

Building equitable AI requires both bias mitigation, for example through disentangled representations and federated approaches, and the design of transparent systems that make reasoning visible rather than hiding it in a black box.

Global Regulatory Frameworks and Data Governance

Regulatory bodies are rapidly adapting to the complexity of multimodal AI systems.

FDA (U.S. Food and Drug Administration): The agency encourages the safe and effective development of AI-enabled medical devices (27) and recognizes that multimodal foundation models differ fundamentally from static algorithms.

Future regulatory pathways will likely focus on continuous monitoring, adaptive performance evaluation, and bias risk management.

Initiatives like PathologAI reflect the FDA’s commitment to building frameworks for histopathology AI, including for preclinical toxicology data (28).

EHDS (European Health Data Space): This initiative is establishing a harmonized legal and technical framework for electronic health records, promoting interoperability across the EU (29).

The adoption of standardized data generation for digital pathology within EHDS could become a gold standard for precision medicine in Europe (30).

EMA (European Medicines Agency): The EMA is integrating AI principles into its regulatory oversight to ensure data-driven insight generation and risk management in biomedical innovation (31).

These frameworks signal a paradigm shift: regulators are no longer merely approving devices; they are co-designing ecosystems of trust that ensure the safe, equitable, and continuous evolution of multimodal AI in healthcare.
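The continuous monitoring these frameworks anticipate can start with something simple. One check is whether the distribution of a deployed model's output scores drifts away from what was seen during validation. The population stability index (PSI) is one common statistic for this.

The sketch below illustrates PSI under that assumption. It is not a method prescribed by the FDA, EMA, or any other regulator, and the score samples are synthetic.

```python
import math


def population_stability_index(reference, current, bins=10, eps=1e-6):
    """Compare the distribution of model scores in [0, 1] between a reference
    (validation-time) sample and a current (deployment-time) sample."""
    def histogram(values):
        counts = [0] * bins
        for v in values:
            idx = min(int(v * bins), bins - 1)  # a score of exactly 1.0 goes in the last bin
            counts[idx] += 1
        # eps avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), eps) for c in counts]

    ref, cur = histogram(reference), histogram(current)
    # Each term is non-negative, so PSI is 0 only when the histograms match.
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


reference = [i / 100 for i in range(100)]          # scores seen during validation
shifted = [min(0.99, s + 0.3) for s in reference]  # scores drifting upward after deployment
print(round(population_stability_index(reference, reference), 4))
print(round(population_stability_index(reference, shifted), 4))
```

A rising PSI does not prove the model has become less accurate. It flags that the input population or the model's behavior has changed enough to warrant re-validation.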

Conclusion and Future Perspectives

Synthesis of Current Capabilities

Multimodal digital pathology has evolved rapidly from early feature-fusion approaches such as Pathomic Fusion, to advanced contrastive alignment models like CLOVER and SPADE, and now toward foundation models capable of reasoning across vision and language (e.g., TITAN, PathChat).

These systems demonstrate the ability to decode deep molecular information from morphology alone, integrating omics, WSI, and textual data to build unified, general-purpose representations.

They no longer merely recognize patterns; they model relationships across biological and clinical dimensions, a milestone that redefines what “intelligence” means in medical AI.

Persistent Barriers and Strategic Challenges

Despite this remarkable progress, widespread clinical implementation of multimodal AI faces several persistent challenges:

Data Governance at Scale: The creation of large, diverse, and ethically compliant datasets (as pursued by BIGPICTURE) remains the primary bottleneck for robust AI training.

Clinical Interoperability: Deployment requires universal adoption of imaging standards (DICOM-WSI) and their integration with clinical data models (OMOP/FHIR).

Regulation and Continuous Validation: Foundation models are inherently adaptive, demanding new regulatory frameworks capable of real-time performance monitoring, bias mitigation, and explainability.

These are not merely technical problems; they are organizational and cultural challenges that will determine the pace and direction of clinical transformation.

Strategic Recommendations

To accelerate the clinical translation of multimodal digital pathology, several key actions are essential:

Prioritize Infrastructure Standardization

Enforce the adoption of universal standards such as DICOM for WSIs and ensure their alignment with OMOP/FHIR-based clinical data frameworks.

This will enable interoperable, large-scale observational research, the foundation for trustworthy AI.

Build Trust through Explainability and Bias Mitigation

Multimodal foundation models must prioritize transparency by design.

Pathologists should be able to interrogate how the model attributes importance across morphological and molecular features, ensuring equity and interpretability.

Adopt Distributed and Privacy-Preserving Architectures

Federated learning must become a default paradigm for training models across protected clinical datasets, reconciling innovation with patient privacy.

Focus on Clinical ROI

AI adoption will only scale when it demonstrates tangible medical value and financial sustainability.

Companion diagnostics (CDx) exemplify how high-impact clinical applications can justify digital transformation while improving patient outcomes.

Looking Ahead

Multimodal pathology is more than a technological evolution — it represents a cultural convergence.

It challenges medicine to move beyond silos: to see the patient not as separate layers of data, but as an interconnected biological system that can finally be understood holistically.

The future of pathology will not be defined by pixels, genes, or reports in isolation — but by the meaning extracted from their interaction.

When every image speaks the same language as every gene and every clinical note, we will have achieved something greater than efficiency: we will have redefined diagnosis as an act of integration.

💬 In the end, multimodality is not just about combining data; it is about restoring coherence to medicine itself.

References

1. Masjoodi S, Anbardar MH, Shokripour M, Omidifar N. Whole Slide Imaging (WSI) in Pathology: Emerging Trends and Future Applications in Clinical Diagnostics, Medical Education, and Pathology. Iran J Pathol. 2025;20(3):257–65.

2. Parvin N, Joo SW, Jung JH, Mandal T. Multimodal AI in Biomedicine: Pioneering the Future of Biomaterials, Diagnostics, and Personalized Healthcare. Nanomaterials (Basel). 2025 June 10;15(12):895.

3. Chen RJ, Lu MY, Wang J, Williamson DFK, Rodig SJ, Lindeman NI, et al. Pathomic Fusion: An Integrated Framework for Fusing Histopathology and Genomic Features for Cancer Diagnosis and Prognosis. IEEE Trans Med Imaging. 2022 Apr;41(4):757–70.

4. Waqas A, Naveed J, Shahnawaz W, Asghar S, Bui MM, Rasool G. Digital pathology and multimodal learning on oncology data. BJR Artif Intell. 2024 Mar 4;1(1):ubae014.

5. TCGA - PanCanAtlas Publications | NCI Genomic Data Commons [Internet]. [cited 2025 Oct 10]. Available from: https://gdc.cancer.gov/about-data/publications/pancanatlas

6. Cooper LA, Demicco EG, Saltz JH, Powell RT, Rao A, Lazar AJ. PanCancer insights from The Cancer Genome Atlas: the pathologist’s perspective. J Pathol. 2018 Apr;244(5):512–24.

7. Proteomic Data Commons [Internet]. [cited 2025 Oct 10]. Available from: https://proteomic.datacommons.cancer.gov/pdc/cptac-pancancer

8. Valle D, C A. Leveraging digital pathology and AI to transform clinical diagnosis in developing countries. Front Med [Internet]. 2025 Sept 25 [cited 2025 Oct 10];12. Available from: https://www.frontiersin.org/journals/medicine/articles/10.3389/fmed.2025.1657679/full

9. Our work | Bigpicture [Internet]. [cited 2025 Oct 10]. Available from: https://bigpicture.eu/our-work

10. The catalyst in the digital transformation of pathology | Bigpicture [Internet]. [cited 2025 Oct 10]. Available from: https://bigpicture.eu/

11. Yu S, Kim Y, Kim H, Lee S, Kim K. Contrastive Learning for Omics-guided Whole-slide Visual Embedding Representation [Internet]. bioRxiv; 2025 [cited 2025 Oct 10]. p. 2025.01.12.632280. Available from: https://www.biorxiv.org/content/10.1101/2025.01.12.632280v1

12. Redekop E, Pleasure M, Wang Z, Flores K, Sisk A, Speier W, et al. SPADE: Spatial Transcriptomics and Pathology Alignment Using a Mixture of Data Experts for an Expressive Latent Space [Internet]. arXiv; 2025 [cited 2025 Oct 10]. Available from: http://arxiv.org/abs/2506.21857

13. Pathology Foundation Models. JMA J. 2025;8(1):121–30.

14. Zhang S, Xu Y, Usuyama N, Xu H, Bagga J, Tinn R, et al. BiomedCLIP: a multimodal biomedical foundation model pretrained from fifteen million scientific image-text pairs [Internet]. arXiv; 2025 [cited 2025 Oct 10]. Available from: http://arxiv.org/abs/2303.00915

15. Ding T, Wagner SJ, Song AH, Chen RJ, Lu MY, Zhang A, et al. Multimodal Whole Slide Foundation Model for Pathology [Internet]. arXiv; 2024 [cited 2025 Oct 10]. Available from: http://arxiv.org/abs/2411.19666

16. Lu MY, Chen B, Williamson DFK, Chen RJ, Zhao M, Chow AK, et al. A multimodal generative AI copilot for human pathology. Nature. 2024 Oct;634(8033):466–73.

17. Lu MY, Chen B, Williamson DFK, Chen RJ, Liang I, Ding T, et al. A visual-language foundation model for computational pathology. Nat Med. 2024 Mar;30(3):863–74.

18. Enabling the future of digital pathology with DICOM at Pathology Visions 2025 [Internet]. [cited 2025 Oct 10]. Available from: https://www.merative.com/blog/enabling-the-future-of-digital-pathology-with-dicom-at-pathology-visions-2025

19. The nuances of integration and interoperability in digital pathology [Internet]. [cited 2025 Oct 10]. Available from: https://www.usa.philips.com/healthcare/white-paper/digital-pathology-interoperability

20. Park WY, Sippel Schmidt T, Salvador G, O’Donnell K, Genereaux B, Jeon K, et al. Breaking data silos: incorporating the DICOM imaging standard into the OMOP CDM to enable multimodal research. J Am Med Inform Assoc. 2025 July 18;32(10):1533–41.

21. Multimodal Federated Learning With Missing Modalities through Feature Imputation Network [Internet]. [cited 2025 Oct 10]. Available from: https://arxiv.org/html/2505.20232v1

22. Zarella MD, McClintock DS, Batra H, Gullapalli RR, Valante M, Tan VO, et al. Artificial intelligence and digital pathology: clinical promise and deployment considerations. J Med Imaging (Bellingham). 2023 Sept;10(5):051802.

23. PathAI and Moffitt Cancer Center Announce Strategic Collaboration [Internet]. 2025 [cited 2025 Oct 10]. Available from: https://www.pathai.com/news/pathai-and-moffitt-cancer-center-announce-strategic-collaboration

24. PathAI Announces Exclusive Collaboration with Roche Tissue Diagnostics to Advance AI-enabled Interpretation for Companion Diagnostics [Internet]. 2024 [cited 2025 Oct 10]. Available from: https://www.pathai.com/resources/pathai-announces-exclusive-collaboration-with-roche-tissue-diagnostics-to-advance-ai-enabled-interpretation-for-companion-diagnostics

25. Roche advances AI-driven cancer diagnostics by expanding its digital pathology open environment | Diagnostics [Internet]. [cited 2025 Oct 10]. Available from: https://diagnostics.roche.com/global/en/news-listing/2024/roche-advances-ai-driven-cancer-diagnostics-by-expanding-its-digital-pathology-open-environment.html

26. Chen RJ, Wang JJ, Williamson DFK, Chen TY, Lipkova J, Lu MY, et al. Algorithm fairness in artificial intelligence for medicine and healthcare. Nat Biomed Eng. 2023 June;7(6):719–42.

27. Center for Devices and Radiological Health. Artificial Intelligence-Enabled Medical Devices. FDA [Internet]. 2025 Oct 7 [cited 2025 Oct 10]; Available from: https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-enabled-medical-devices

28. National Center for Toxicological Research. PathologAI Initiative. FDA [Internet]. 2024 Nov 26 [cited 2025 Oct 10]; Available from: https://www.fda.gov/about-fda/nctr-research-focus-areas/pathologai-initiative

29. European Health Data Space Regulation (EHDS) - Public Health [Internet]. 2025 [cited 2025 Oct 10]. Available from: https://health.ec.europa.eu/ehealth-digital-health-and-care/european-health-data-space-regulation-ehds_en

30. Krekora-Zając D. Liability for Damages, AI, and Machine Learning for Digital Pathology as New Challenges for Biobanks. In: Artificial Intelligence in Biobanking. Routledge; 2025.

31. Artificial intelligence | European Medicines Agency (EMA) [Internet]. 2024 [cited 2025 Oct 10]. Available from: https://www.ema.europa.eu/en/about-us/how-we-work/data-regulation-big-data-other-sources/artificial-intelligence