From Performance to Trust: The State of Explainability in Digital Pathology

Exploring the fine line between visual interpretation and real understanding in the new era of computational pathology.

Introduction and conceptual framework of explainability in Digital Pathology

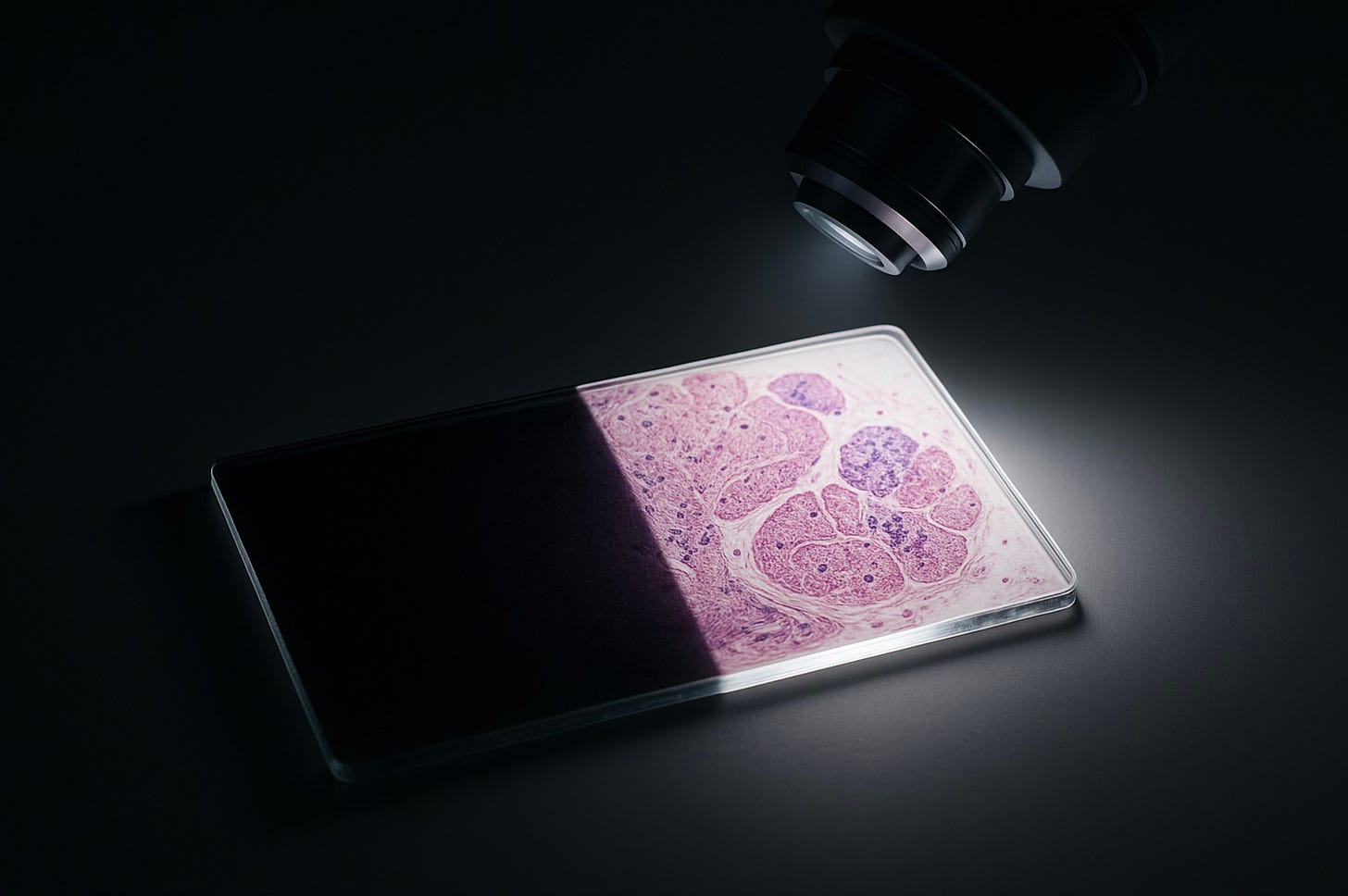

Digital pathology, powered by whole-slide imaging (WSI), has reshaped the landscape of histopathology. It has given rise to a new generation of AI systems capable of assisting in diagnosis, quantifying morphology, and identifying regions of diagnostic relevance.

Yet despite these breakthroughs, a silent problem persists, one that every pathologist who has looked at a saliency map or a Grad-CAM overlay has felt in their gut: we see the result, but not the reasoning behind it.

Artificial intelligence in pathology remains, in many ways, a black box. And in medicine, opacity is not a neutral inconvenience; it’s a clinical and ethical risk.

Explainable AI (XAI) has therefore evolved from being a research goal to becoming a regulatory and safety imperative. In oncology especially, where our decisions shape a patient’s trajectory of treatment, understanding why an algorithm reaches a certain conclusion is as important as what it concludes.

Defining explainability and the challenge of clinical trust

Before diving into the technicalities, it’s crucial to differentiate the three pillars that support clinical trust in AI systems:

Explainability refers to the techniques used to justify a prediction, that is, to show how the model arrives at a given output.

Interpretability concerns the human capacity (in this case, the pathologist’s) to understand the link between input and output.

Transparency goes a step further, encompassing documentation of the system’s entire lifecycle (from data provenance to limitations), which is now a formal requirement from regulatory bodies like the FDA (1).

In digital pathology, where a single misclassified lesion can redefine a patient’s prognosis, blind trust is unacceptable. A “black box” that offers confident predictions without clear reasoning is a safety hazard.

Thus, XAI isn’t a luxury; it’s a risk-mitigation tool that allows the pathologist to verify the model’s logic before making a critical diagnostic decision.

Clinically, explainability must answer three fundamental questions that every pathologist would ask of a human colleague:

Where is the pathology? (localization)

Why did the model reach this grade or classification? (feature-based justification)

What would change if a conceptual feature were different? (counterfactual reasoning)

If the AI system cannot answer these, it’s not truly explainable, no matter how impressive its accuracy metric may appear.

The gigapixel challenge and the fragmentation of the Black Box

The opacity of deep learning models in pathology isn’t just a philosophical issue; it’s structural.

Whole-slide images are gigapixel-scale (billions of pixels), far beyond what conventional end-to-end neural networks can process efficiently (2). To manage this, most architectures rely on a “divide and conquer” approach: breaking the slide into smaller tiles, embedding local features, and aggregating them into a global prediction (2).
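To make the tile, embed, and aggregate pipeline concrete, here is a minimal sketch in Python with NumPy. It is an illustration under stated assumptions, not any particular published architecture: `embed_tile` is a hypothetical stand-in for a real CNN encoder, the slide is a random toy array rather than a real WSI, and mean pooling is only one of several possible aggregation choices.

```python
import numpy as np

def tile_coordinates(height, width, tile_size):
    """Top-left (row, col) of every non-overlapping tile; edge remainders are dropped."""
    rows = range(0, height - tile_size + 1, tile_size)
    cols = range(0, width - tile_size + 1, tile_size)
    return [(r, c) for r in rows for c in cols]

def embed_tile(tile):
    """Hypothetical encoder (assumption): mean and std of pixel intensity."""
    return np.array([tile.mean(), tile.std()])

def slide_prediction(slide, tile_size, weights, bias):
    """Embed each tile independently, mean-pool, then apply a linear classifier."""
    coords = tile_coordinates(*slide.shape, tile_size)
    embeddings = np.stack(
        [embed_tile(slide[r:r + tile_size, c:c + tile_size]) for r, c in coords]
    )
    slide_embedding = embeddings.mean(axis=0)  # spatial arrangement is discarded here
    logit = slide_embedding @ weights + bias
    prob = 1.0 / (1.0 + np.exp(-logit))
    return prob, coords, embeddings

rng = np.random.default_rng(0)
slide = rng.random((1024, 1024))  # toy grayscale "slide", not real tissue
prob, coords, embeddings = slide_prediction(
    slide, tile_size=256, weights=np.array([1.0, -1.0]), bias=0.0
)
print(len(coords), embeddings.shape)
```

Note that each tile is embedded without any knowledge of its neighbors, and the pooling step treats the tiles as an unordered bag. Shuffling the tiles would give the same slide-level prediction.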

This patch-based approach, while computationally practical, fractures the biological context.

It slices through natural tissue boundaries and spatial relationships, forcing the model to interpret the tumor microenvironment as disconnected pieces rather than an integrated biological entity (3).

In doing so, the model loses something fundamental: the continuity of structure and meaning that human pathologists rely on.

When a model aggregates predictions from thousands of isolated patches, it produces an output that may be statistically sound but biologically disoriented. This is where explainability collapses, because it’s nearly impossible to attribute the final decision to meaningful, localized morphological features.

To make AI truly explainable in pathology, we must therefore address not only the interpretability of the output but the representation of the data itself (3).

Critical evaluation of Post-Hoc methods (feature attribution)

Even though the pathology community has embraced visualization tools like saliency maps and Grad-CAM as windows into the model’s “thinking,” these methods remain fragile instruments: beautiful, seductive, and often misleading.

Post-hoc explainability tries to justify a decision after it has already been made.

In clinical terms, it’s like asking a trainee to rationalize a diagnosis they didn’t truly understand. The rationale might look convincing on paper, but it hides an uncomfortable truth: most post-hoc explanations tell us what the model focused on, but not why.

Common methods and their mechanisms

Among the most widely used post-hoc techniques are saliency maps and Gradient-weighted Class Activation Mapping (Grad-CAM).

These methods highlight the regions of an input image that contribute most strongly to the model’s prediction, offering a quick visual “justification” (4).
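The core Grad-CAM computation can be sketched in a few lines of NumPy. This is a simplified illustration, assuming the feature-map activations and the gradients of the class score with respect to them are already available (in practice an autodiff framework supplies them). The toy "network" below, global average pooling followed by a linear layer, is an assumption chosen so the gradients can be written by hand.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM heatmap from (K, H, W) activations and matching gradients.

    Each channel is weighted by its spatially averaged gradient, the weighted
    channels are summed, and negative evidence is removed with a ReLU.
    """
    alphas = gradients.mean(axis=(1, 2))             # one weight per channel, shape (K,)
    cam = np.tensordot(alphas, activations, axes=1)  # weighted sum over channels, (H, W)
    cam = np.maximum(cam, 0.0)                       # keep positive contributions only
    peak = cam.max()
    return cam / peak if peak > 0 else cam           # scale to [0, 1] for display

# Toy model (assumption): score = sum_k w[k] * mean(activations[k])
K, H, W = 2, 4, 4
activations = np.zeros((K, H, W))
activations[0, 1, 2] = 5.0   # channel 0 fires at (1, 2) and supports the class
activations[1, 3, 0] = 5.0   # channel 1 fires at (3, 0) and opposes the class
w = np.array([1.0, -1.0])

# For this toy model, d(score)/d(activations[k, i, j]) = w[k] / (H * W) everywhere.
gradients = np.broadcast_to(w[:, None, None] / (H * W), activations.shape)

heatmap = grad_cam(activations, gradients)
print(np.unravel_index(heatmap.argmax(), heatmap.shape))
```

The heatmap peaks where the supporting channel fired and is zero where the opposing channel fired. It shows where the evidence sits, but says nothing about which morphological property of that region mattered.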

Over the years, many variations have emerged, including Grad-CAM++, Score-CAM, SmoothGrad, and Integrated Gradients, all aiming to improve stability and spatial precision by manipulating gradients or integrating over input paths (4).
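As an illustration of "integrating over input paths," here is a minimal Integrated Gradients sketch. It assumes a toy logistic model whose gradient can be written analytically, an all-zeros baseline, and a midpoint Riemann sum with a hypothetical default of 200 steps. None of these choices come from the cited work.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def model(x, w, b):
    """Toy logistic model (assumption), standing in for a real network."""
    return sigmoid(x @ w + b)

def model_grad(x, w, b):
    """Analytic gradient of the toy model's output with respect to its input."""
    p = model(x, w, b)
    return p * (1.0 - p) * w

def integrated_gradients(x, baseline, w, b, steps=200):
    """Average the gradient along the straight path from baseline to x,
    then scale by the input difference (midpoint Riemann approximation)."""
    alphas = (np.arange(steps) + 0.5) / steps
    grads = np.stack(
        [model_grad(baseline + a * (x - baseline), w, b) for a in alphas]
    )
    return (x - baseline) * grads.mean(axis=0)

x = np.array([2.0, -1.0, 0.5])
baseline = np.zeros_like(x)
w = np.array([1.5, -2.0, 0.0])
b = -1.0

attributions = integrated_gradients(x, baseline, w, b)
output_change = model(x, w, b) - model(baseline, w, b)
print(attributions, attributions.sum(), output_change)
```

The attributions sum, up to numerical error, to the change in model output between the baseline and the input. This also means the result depends on the choice of baseline, which is one reason these maps need careful reading.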

Other methods, such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations), use perturbation strategies: they slightly alter input regions to estimate how each area influences the prediction.
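The perturbation idea is easiest to see in its simplest form, occlusion sensitivity: mask one region at a time and record how much the prediction drops. The sketch below is not LIME or SHAP themselves, which add a surrogate model or Shapley-value weighting on top of this idea. `toy_predict` is a hypothetical model used only for illustration.

```python
import numpy as np

def occlusion_map(image, predict, patch, fill=0.0):
    """Drop in prediction when each non-overlapping patch is replaced by `fill`."""
    base = predict(image)
    h, w = image.shape
    heat = np.zeros((h // patch, w // patch))
    for i in range(h // patch):
        for j in range(w // patch):
            occluded = image.copy()
            occluded[i * patch:(i + 1) * patch, j * patch:(j + 1) * patch] = fill
            heat[i, j] = base - predict(occluded)  # large value = influential region
    return heat

def toy_predict(image):
    """Hypothetical model (assumption): responds only to the upper-left quadrant."""
    return float(image[:4, :4].mean())

image = np.ones((8, 8))
heat = occlusion_map(image, toy_predict, patch=4)
print(heat)
```

Each cell of the map costs one extra forward pass, so the cost grows with the number of regions tested.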

While these methods are effective on lower-dimensional data, such as tabular or radiological settings, applying them to gigapixel WSIs is computationally expensive and conceptually problematic.
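A back-of-the-envelope calculation gives a sense of the computational scale. Every number below is an illustrative assumption rather than a measurement: a 100,000 × 100,000 pixel slide, 256-pixel tiles, 1,000 perturbed samples per tile, and a throughput of 1,000 tile inferences per second.

```python
def perturbation_forward_passes(slide_px, tile_px, samples_per_tile):
    """Tiles in a square slide and total forward passes for a per-tile perturbation method."""
    tiles_per_side = slide_px // tile_px
    n_tiles = tiles_per_side ** 2
    return n_tiles, n_tiles * samples_per_tile

# All inputs are assumptions chosen for illustration.
n_tiles, n_passes = perturbation_forward_passes(
    slide_px=100_000, tile_px=256, samples_per_tile=1_000
)
throughput_per_second = 1_000
hours = n_passes / throughput_per_second / 3600

print(n_tiles)   # 152100 tiles
print(n_passes)  # 152100000 forward passes
print(hours)     # 42.25 hours for a single slide
```

Under these assumptions, explaining one slide would take more than a day and a half of inference, before any tissue filtering or sampling shortcuts are applied.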

Each tile of a WSI contains only a fraction of the story.

So when the system highlights one tile and not another, we might be seeing not pathology, but noise: a microscopic echo of an algorithm trying to “explain” without truly “understanding.”

At first glance, these maps give comfort because they look familiar. But familiarity is not fidelity.