Federated Learning in Digital Pathology: Clinical, Technical, and Regulatory Applications

Federated Learning (FL) marks a fundamental shift in how Artificial Intelligence (AI) can be applied to healthcare. It enables the collaborative training of machine learning (ML) models on decentralized datasets, without the need to transfer any sensitive patient information.

In digital pathology, where whole-slide images (WSI) reach gigapixel resolution and contain highly sensitive diagnostic data, FL directly addresses two of the most pressing challenges in the field: data privacy and data accessibility. Between 2022 and 2025, it has proven to be a driving force for research and clinical applications alike, offering a viable solution in environments bound by stringent privacy regulations such as GDPR and HIPAA.

By allowing models to be trained on diverse, multi-institutional datasets without raw data ever leaving its source, FL builds robust, generalizable AI systems. Such systems are essential for rare diseases and for coping with the inherent variability of pathological datasets. Its natural alignment with privacy regulations positions FL not as an experimental trend but as a foundational technology for responsible and scalable innovation in digital health.

1. Introduction to Federated Learning in Digital Pathology

1.1 Definition and Core Principles

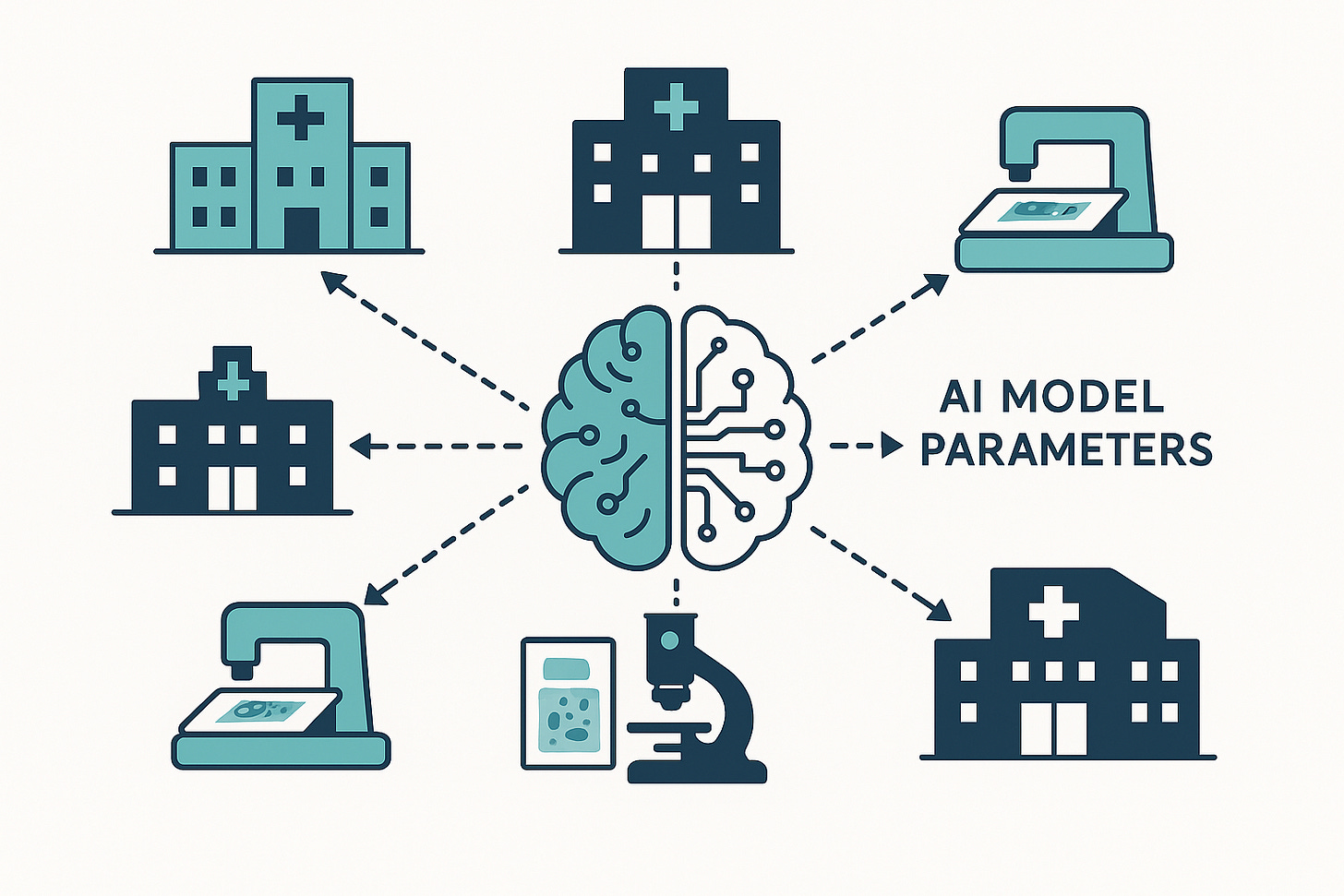

Federated Learning is a decentralized approach to training ML models. Unlike traditional ML, where datasets from multiple sources are consolidated into a single location, FL keeps the data in place. Each node (or client) in a distributed network (such as a hospital or research center) trains a shared global model exclusively on its local data.

A central server orchestrates the process: it collects only model updates (parameters or gradients) from each client and aggregates them to refine the global model, without ever accessing the underlying raw data (1).

This process unfolds in a series of clearly defined stages:

Model Initialization: The central server creates a global ML model and distributes it to participating clients, which may include hospitals, research labs, or even edge devices.

Local Training: Each client trains the model locally on its own datasets, following standard neural network training principles.

Parameter Transmission: Once local training concludes, clients send only their updated parameters or gradients, never raw data, back to the central server.

Aggregation: The server integrates these updates, often using weighted averaging methods such as Federated Averaging (FedAvg), to improve the global model.

Iteration: The updated global model is redistributed to all clients, initiating a new training round. This loop continues until the model converges or predefined termination criteria are met (2).
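The five stages above can be simulated in a few lines. The sketch below is a minimal illustration, assuming a toy linear least-squares model and synthetic data in place of a pathology network and WSIs. The function names (`local_train`, `fedavg`, `run_federated_training`) are hypothetical and not taken from any FL framework. Aggregation follows the FedAvg idea of weighting each client's parameters by its number of local samples.

```python
import numpy as np

def local_train(weights, X, y, lr=0.1, epochs=5):
    """Local Training: a few full-batch gradient steps on a linear
    least-squares model, using only this client's data."""
    w = weights.copy()
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w -= lr * grad
    return w

def fedavg(client_weights, client_sizes):
    """Aggregation: average client parameters weighted by local sample count."""
    sizes = np.asarray(client_sizes, dtype=float)
    stacked = np.stack(client_weights)
    return (sizes[:, None] * stacked).sum(axis=0) / sizes.sum()

def run_federated_training(clients, dim, rounds=50):
    global_w = np.zeros(dim)                          # Model Initialization
    for _ in range(rounds):                           # Iteration
        updates, sizes = [], []
        for X, y in clients:
            # Each client trains locally; only the parameter vector is returned
            updates.append(local_train(global_w, X, y))   # Parameter Transmission
            sizes.append(len(y))
        global_w = fedavg(updates, sizes)             # Aggregation
    return global_w

# Usage: three simulated "hospitals" of different sizes sharing one signal
rng = np.random.default_rng(0)
true_w = np.array([1.5, -2.0, 0.5])
clients = []
for n in (40, 80, 120):
    X = rng.normal(size=(n, 3))
    y = X @ true_w + 0.01 * rng.normal(size=n)
    clients.append((X, y))

w = run_federated_training(clients, dim=3)
print(np.round(w, 2))
```

In a real deployment the local step would be several epochs of neural-network training on separate machines, and only the parameter vectors returned by `local_train` would cross the network.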

The core strength of FL lies in its ability to preserve privacy by design, a principle that makes it particularly valuable in healthcare, where data centralization often creates legal, ethical, and operational barriers.

Relevance for Whole-Slide Images

In digital pathology, the application of FL is particularly compelling. Whole-slide images are vast gigapixel datasets rich in diagnostic value yet highly sensitive under privacy laws (3).

For example, imagine a consortium of hospitals collaborating to develop an AI model for breast cancer detection in WSIs. Each hospital, acting as a client node, trains the model locally on its own patient slides. Instead of sending those images to a central repository, each hospital transmits only the model’s learned parameters to the central server.

The server aggregates these updates into a stronger, more generalizable global model, which is then redistributed to all participating hospitals for further local training. The end result: a model trained on a vastly more diverse dataset without a single raw image crossing institutional boundaries (1).

Weakly Supervised Multiple Instance Learning (MIL)

One of the most relevant use cases for FL in pathology is weakly supervised Multiple Instance Learning. This approach is particularly suited to WSI datasets, where detailed pixel-level annotations are scarce.

In this framework, a WSI is treated as a “bag” of smaller image patches. The model learns from slide-level or patient-level labels (which are easier to obtain) rather than from exhaustive pixel-by-pixel annotations.
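To make the "bag" idea concrete, here is a minimal forward pass for attention-based MIL pooling, one common way (assumed here, not specified above) of turning many patch feature vectors into a single slide-level prediction. The parameters `V`, `w`, and `classifier` are hypothetical and untrained, and the feature vectors are random stand-ins for embeddings produced by a patch encoder.

```python
import numpy as np

def attention_mil_forward(bag, V, w, classifier):
    """Score a whole slide from a bag of patch feature vectors.

    bag: (num_patches, feat_dim) array, one row per patch.
    Returns the slide-level probability and the per-patch attention weights.
    """
    scores = np.tanh(bag @ V) @ w          # one attention score per patch
    scores = scores - scores.max()         # numerical stability for softmax
    attn = np.exp(scores) / np.exp(scores).sum()
    slide_embedding = attn @ bag           # attention-weighted average of patches
    logit = slide_embedding @ classifier
    prob = 1.0 / (1.0 + np.exp(-logit))
    return prob, attn

def bag_loss(prob, slide_label):
    """Binary cross-entropy against the single slide-level label."""
    eps = 1e-7
    prob = np.clip(prob, eps, 1 - eps)
    return -(slide_label * np.log(prob) + (1 - slide_label) * np.log(1 - prob))

rng = np.random.default_rng(0)
feat_dim, hidden = 16, 8
# Hypothetical, untrained parameters
V = rng.normal(scale=0.1, size=(feat_dim, hidden))
w = rng.normal(scale=0.1, size=hidden)
classifier = rng.normal(scale=0.1, size=feat_dim)

bag = rng.normal(size=(500, feat_dim))     # 500 patch feature vectors, one slide
prob, attn = attention_mil_forward(bag, V, w, classifier)
print(f"slide probability={prob:.3f}, loss={bag_loss(prob, 1):.3f}")
```

Only the slide label enters the loss; the attention weights let the model learn which patches matter without any patch-level annotation.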

Patches are converted into feature vectors, and only these vectors are used for local training. Model parameters are then aggregated centrally. For an additional privacy safeguard, differential privacy can be applied by adding controlled noise to parameters before transmission (3).
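Adding "controlled noise" is typically done by first clipping each update to a bounded L2 norm and then adding Gaussian noise scaled to that bound (the Gaussian mechanism). The sketch below assumes this approach; the `clip_norm` and `noise_multiplier` values are illustrative, and computing the resulting (ε, δ) privacy guarantee requires a privacy accountant that is not shown.

```python
import numpy as np

def privatize_update(update, clip_norm, noise_multiplier, rng):
    """Clip an update to a maximum L2 norm, then add Gaussian noise.

    Clipping bounds how much any single client can move the model; the
    noise standard deviation is scaled to that bound.
    Returns (noisy_update, clipped_update).
    """
    norm = np.linalg.norm(update)
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    clipped = update * scale
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=update.shape)
    return clipped + noise, clipped

rng = np.random.default_rng(42)
local_update = rng.normal(size=1000)   # stand-in for a client's parameter delta
noisy, clipped = privatize_update(local_update, clip_norm=1.0,
                                  noise_multiplier=0.5, rng=rng)
print(np.linalg.norm(local_update), np.linalg.norm(clipped), np.linalg.norm(noisy))
```

Only `noisy` would be transmitted. Larger noise multipliers strengthen privacy but slow convergence, which is one concrete form of the privacy and performance trade-off.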

The Scale Challenge

FL is not just a privacy solution; it also addresses a critical scalability challenge. WSIs are enormous: a single slide can contain billions of pixels, and even a modest slide collection can rival the storage volume of the entire ImageNet dataset.

Centralizing and transferring such datasets is prohibitively expensive and operationally impractical. FL solves this “data paradox” by enabling in-place collaborative learning across distributed datasets, making large-scale AI development feasible without massive storage or bandwidth investments.

This democratizes computational pathology research, allowing smaller institutions to contribute without needing the infrastructure of large academic medical centers (2).

A Framework for Distributed Trust

Perhaps the most overlooked advantage of FL is its ability to foster distributed trust. Institutions that might otherwise be reluctant (or legally unable) to collaborate can now do so without exposing raw patient data (4).

This makes FL more than a technical innovation: it is a strategic enabler for multi-institutional research and AI deployment in healthcare. Here, privacy, compliance, and trust are not afterthoughts; they are built into the system’s DNA.

1.2 Key Advantages of Federated Learning

Federated Learning (FL) brings a set of substantial benefits, particularly relevant to healthcare and digital pathology.

The most prominent advantage is enhanced data privacy and security. FL ensures that raw data never leaves its original location (5), dramatically reducing the risk of exposing personally identifiable information (PII) or protected health information (PHI) during transmission or storage (1). This is a critical feature in hospital environments, where patient information is highly sensitive and subject to strict regulatory oversight (1).

Here, privacy is not merely a technical specification; it is a core enabler of collaboration in the healthcare sector. Without FL, integrating large, diverse medical datasets from multiple institutions would be nearly impossible from both a legal and logistical standpoint. By keeping sensitive data in place, FL turns what was once a regulatory roadblock into an opportunity for collaborative innovation (6).

Another decisive advantage is access to large and diverse distributed datasets. FL allows training on massive volumes of data that would otherwise remain locked within institutional silos (1). This diversity (stemming from different patient populations, equipment, and clinical protocols) produces AI models that are more robust and generalizable (3).

Such models are less prone to bias and demonstrate stronger performance in real-world clinical settings. This multiplicative effect of data diversity is particularly important in the context of rare diseases, where no single institution can generate enough cases for effective model training (7). By combining heterogeneous datasets through FL, the resulting global model achieves a level of generalization that even large institutions with extensive local datasets could not reach on their own.

From an efficiency and latency perspective, FL removes the need to transfer large volumes of raw data to a central server (1). This significantly reduces both latency and bandwidth requirements for ML training, an especially relevant factor when working with gigapixel WSIs (3). Efficiency here extends beyond network speed to include reduced infrastructure costs for storage and large-scale data transfer.

To illustrate: a single WSI can occupy 2.5 GB, and a large center can generate up to 1.1 petabytes of data per year (8). Without FL, accommodating such volumes would demand an expensive, centralized big data infrastructure. By avoiding this requirement, FL makes AI development more accessible to institutions with limited resources (4).
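A quick back-of-the-envelope calculation shows what centralizing these volumes would mean. It uses the 2.5 GB and 1.1 PB figures quoted above, assumes decimal units, and assumes a hypothetical dedicated 1 Gbit/s link running at full capacity with no protocol overhead.

```python
# Figures from the text; decimal units assumed (1 GB = 1e9 B, 1 PB = 1e15 B)
slide_size_bytes = 2.5e9
annual_volume_bytes = 1.1e15

slides_per_year = annual_volume_bytes / slide_size_bytes
print(f"~{slides_per_year:,.0f} slides per year")

# Hypothetical 1 Gbit/s link at full utilisation, no protocol overhead
link_bits_per_second = 1e9
seconds_per_day = 86_400
transfer_days = annual_volume_bytes * 8 / link_bits_per_second / seconds_per_day
print(f"~{transfer_days:.0f} days to upload one year of slides")
```

Under these assumptions, one year of slides amounts to roughly 440,000 WSIs and over three months of continuous uploading from a single center, before any copy reaches a central repository.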

Finally, FL supports improved regulatory compliance. Its decentralized architecture helps healthcare organizations meet stringent data protection requirements under GDPR and HIPAA, as confidential information never leaves the local node. This inherently simplifies the development of AI models that meet compliance standards, reducing associated legal risks and accelerating adoption in regulated clinical environments (1, 6).

1.3 Limitations Compared to Centralized Learning

Despite its notable advantages, Federated Learning (FL) also presents significant challenges and limitations when compared to centralized approaches, particularly in complex environments such as hospitals. The same decentralization that gives FL its primary privacy advantage can, paradoxically, introduce a range of technical and operational complexities.

One of the most prominent challenges is data heterogeneity (non-IID data). In practice, datasets at client nodes are rarely independent and identically distributed. Differences in patient populations, scanner hardware, or staining protocols often result in substantial variability between clients’ data distributions (3). This disparity can lead to suboptimal performance of the global model or slower convergence, as local models may overfit to their institution’s data and fail to generalize effectively to the aggregated dataset.
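Researchers often reproduce this kind of heterogeneity in simulation by partitioning a dataset with a Dirichlet distribution over class proportions. The sketch below assumes that convention, which is not described in the source: `alpha` controls how skewed each client's label mix is, and the three classes are hypothetical.

```python
import numpy as np

def dirichlet_partition(labels, num_clients, alpha, rng):
    """Split sample indices across clients with label skew.

    For each class, client shares are drawn from Dirichlet(alpha). Small alpha
    gives highly skewed (non-IID) clients; large alpha approaches an IID split.
    """
    client_indices = [[] for _ in range(num_clients)]
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        shares = rng.dirichlet(alpha * np.ones(num_clients))
        cut_points = (np.cumsum(shares)[:-1] * len(idx)).astype(int)
        for client, part in enumerate(np.split(idx, cut_points)):
            client_indices[client].extend(part.tolist())
    return client_indices

rng = np.random.default_rng(0)
labels = rng.integers(0, 3, size=3000)    # e.g. three hypothetical tumour grades
for alpha in (0.1, 100.0):
    parts = dirichlet_partition(labels, num_clients=4, alpha=alpha, rng=rng)
    print(f"alpha={alpha}")
    for c, idx in enumerate(parts):
        counts = np.bincount(labels[np.asarray(idx, dtype=int)], minlength=3)
        print(f"  client {c}: class counts {counts}")
```

With `alpha=0.1` clients typically end up dominated by one or two classes, mimicking sites with very different case mixes; with `alpha=100` the split is close to IID.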

Another limitation is local computational cost. Each client must have sufficient processing capacity to train the model locally, something that can be a serious barrier for resource-constrained devices or for large, complex models (2).

While FL removes the need to transfer raw datasets, it still incurs communication overhead. The iterative exchange of model parameters between clients and the central server can place considerable load on network bandwidth, especially with large models or when involving a high number of clients (1).
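The communication cost can be estimated directly. Assuming uncompressed 32-bit parameters and that every client downloads the global model and uploads one update per round, total traffic grows linearly with model size, number of clients, and number of rounds. The model size, client count, and round count below are hypothetical.

```python
def fl_traffic_gb(num_params, num_clients, num_rounds, bytes_per_param=4):
    """Total gigabytes exchanged: each round, every client downloads the
    global model and uploads one update of the same size (no compression)."""
    per_transfer = num_params * bytes_per_param
    total_bytes = per_transfer * 2 * num_clients * num_rounds
    return total_bytes / 1e9

# Hypothetical setting: 25M float32 parameters, 10 hospitals, 100 rounds
print(f"{fl_traffic_gb(25_000_000, 10, 100):.0f} GB")
```

The hypothetical setting yields 200 GB of total traffic. Shrinking the exchanged model, for example by training only a compact aggregation head on precomputed patch features as in the MIL setup described earlier, reduces this figure proportionally.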

Synchronization and latency also represent critical operational factors. Coordinating multiple nodes is inherently complex due to variable network latency, unstable connectivity, and differences in computational power among clients. This can lead to the so-called straggler effect, where slower devices delay aggregation rounds, producing stale updates that negatively impact both training efficiency and model convergence (9). This is a direct consequence of decentralization: the very characteristic that resolves privacy concerns also creates a new set of engineering and algorithmic challenges.
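One common mitigation, assumed here rather than prescribed by the source, is to give each round a deadline and aggregate only the updates that arrive in time. The sketch reuses sample-count weighting; client timings are simulated, and the function name `deadline_round` is hypothetical.

```python
import numpy as np

def deadline_round(global_w, client_results, deadline_s):
    """Aggregate only updates that arrive before the round deadline.

    client_results: list of (update, num_samples, finish_time_s).
    Late (straggler) updates are dropped for this round; if nobody makes
    the deadline, the global model is left unchanged.
    Returns (new_global_weights, number_of_updates_used).
    """
    on_time = [(u, n) for u, n, t in client_results if t <= deadline_s]
    if not on_time:
        return global_w, 0
    sizes = np.array([n for _, n in on_time], dtype=float)
    stacked = np.stack([u for u, _ in on_time])
    new_w = (sizes[:, None] * stacked).sum(axis=0) / sizes.sum()
    return new_w, len(on_time)

global_w = np.zeros(2)
results = [
    (np.array([1.0, 1.0]), 100, 30.0),    # fast hospital
    (np.array([3.0, 3.0]), 100, 45.0),    # fast hospital
    (np.array([9.0, 9.0]), 300, 600.0),   # straggler on a slow link
]
new_w, used = deadline_round(global_w, results, deadline_s=120.0)
print(new_w, used)
```

The design trade-off is that sites on slow links may be excluded repeatedly, under-representing their data in the global model; asynchronous or staleness-aware aggregation schemes address this at the cost of added complexity.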

From a security perspective, FL is not immune to adversarial attacks. Malicious participants could train their local models on poisoned data or manipulate model updates before transmitting them to the central server, compromising the integrity and reliability of the global model (1).
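Robust aggregation rules are one family of defenses against manipulated updates. The sketch below contrasts plain averaging with a coordinate-wise median, a rule chosen here for illustration rather than taken from the source; the update values, including the malicious one, are made up.

```python
import numpy as np

def mean_aggregate(updates):
    """Plain (unweighted) averaging of client updates."""
    return np.mean(np.stack(updates), axis=0)

def median_aggregate(updates):
    """Coordinate-wise median: each parameter takes the median across clients,
    so a minority of extreme updates cannot drag it arbitrarily far."""
    return np.median(np.stack(updates), axis=0)

honest = [np.array([0.9, -1.1]), np.array([1.0, -1.0]),
          np.array([1.1, -0.9]), np.array([1.0, -1.0])]
poisoned = np.array([100.0, 100.0])       # hypothetical malicious update
updates = honest + [poisoned]

print("mean:  ", mean_aggregate(updates))
print("median:", median_aggregate(updates))
```

A single extreme update shifts the mean by roughly 20 units per coordinate, while the median stays at the honest consensus. Median-style rules only tolerate a minority of attackers, and under strongly non-IID data they can also discard legitimate but unusual updates.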

Implementation complexity is another factor to consider. Setting up and managing a distributed FL system is inherently more complex than deploying a centralized architecture and demands careful planning to address technical and operational challenges (6). Overcoming these limitations often requires advanced countermeasures, some of which reintroduce computational burden or cost. For example, homomorphic encryption can protect parameter updates during transmission, but it is computationally expensive (10).
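Homomorphic encryption lets the server combine updates it cannot read. The toy example below uses the Paillier cryptosystem, an additively homomorphic scheme chosen for illustration (the source does not name a scheme): multiplying ciphertexts adds the underlying plaintexts, so encrypted updates can be summed before a single decryption. Key sizes are deliberately tiny and insecure, fixed-point scaling of float updates is an assumption, and key management (in practice the aggregating server must not hold the private key) is not modeled.

```python
import math
import secrets

# Toy Paillier keys from two known Mersenne primes. Far too small to be
# secure; real deployments use primes of 1024 bits or more.
P, Q = 2**31 - 1, 2**61 - 1
N = P * Q
N2 = N * N
LAM = math.lcm(P - 1, Q - 1)     # requires Python 3.9+
MU = pow(LAM, -1, N)             # valid because the generator is g = N + 1

SCALE = 10**6                    # fixed-point encoding for float updates

def encrypt(m):
    """Encrypt a (possibly negative) integer with a fresh random r."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(N + 1, m % N, N2) * pow(r, N, N2)) % N2

def decrypt(c):
    """Decrypt and map the result back to a signed integer."""
    m = ((pow(c, LAM, N2) - 1) // N) * MU % N
    return m - N if m > N // 2 else m

def add_encrypted(c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return (c1 * c2) % N2

# One parameter's update from three hypothetical clients
client_updates = [0.125, -0.5, 0.3]
ciphertexts = [encrypt(round(u * SCALE)) for u in client_updates]

encrypted_sum = ciphertexts[0]
for c in ciphertexts[1:]:
    encrypted_sum = add_encrypted(encrypted_sum, c)   # server sees only ciphertexts

mean_update = decrypt(encrypted_sum) / SCALE / len(client_updates)
print(mean_update)
```

Even in this toy, every parameter becomes a modular exponentiation over a large integer; with realistic key sizes and millions of parameters, this is where the computational expense of homomorphic encryption comes from.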

In terms of raw performance, FL can match the accuracy of traditional, centralized ML in certain scenarios under controlled conditions (11), but unmanaged data heterogeneity can cause lower accuracy or unstable convergence (12). In many digital pathology applications, the adoption of FL is driven less by inherent performance superiority and more by the practical impossibility of centralizing data. The trade-off between privacy and performance remains a constant consideration in its development and deployment (11).

2. Clinical Applications and Documented Use Cases (2022–2025)

Federated Learning (FL) has shown transformative potential in digital pathology, supported by a growing body of proof-of-concept studies and pilot projects that validate its effectiveness between 2022 and 2025.

2.1 Diagnosis and Prognosis in Digital Pathology

In digital pathology, FL applications focus on developing AI models to enhance diagnostic accuracy, improve prognostic prediction, and support risk stratification, particularly in oncology. The ability to train models on distributed datasets without compromising privacy is critical to addressing both the scarcity of large annotated datasets and the inherent heterogeneity of clinical samples.